Release 88.2: Flink Consumers and Encrypted Configuration

Kpow v88.2 introduces improved support for monitoring Flink consumers, the ability to encrypt your Kpow configuration to avoid passwords in plaintext, new configuration options for connecting to Confluent Schema Registries that require mutual TLS for authentication, and more.

Release 88.1: A New Release Model

Kpow v88.1 is the first release to follow our new Major.Minor release model.

Release 88: Message Header Production and Protobuf Improvements

Kpow v88 features support for producing with message headers, protobuf referenced schemas, a new topic partition increase function, and much more.

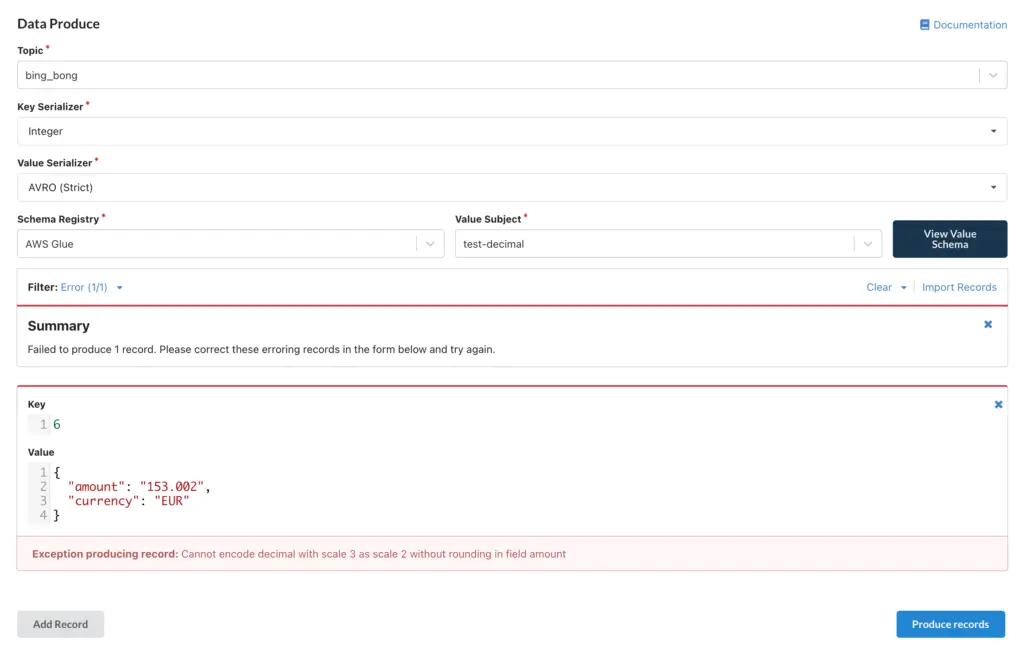

Release 87: AVRO Decimal Logical Type Support

Kpow v87 features support for AVRO Decimal Logical Type fields in both Data Inspect and Data Produce, improved Data Import UX for CSV message upload, and support for Red Hat OpenShift Streams for Apache Kafka (RHOSAK).

Release 86: Compute Performance and AWS Glue UX

Kpow v86 features improved snapshot compute performance and an updated Schema UI that shows AWS Glue schema status.

Release 85: Data Masking Improvements

Kpow v85 features improved Data Masking support and a new ConsumerOffsets serde in Data Inspect.

Join the Factor Community

We’re building more than products; we’re building a community. Whether you’re getting started or pushing the limits of what’s possible with Kafka and Flink, we invite you to connect, share, and learn with others.