Integrate Kpow with Google Managed Schema Registry

Overview

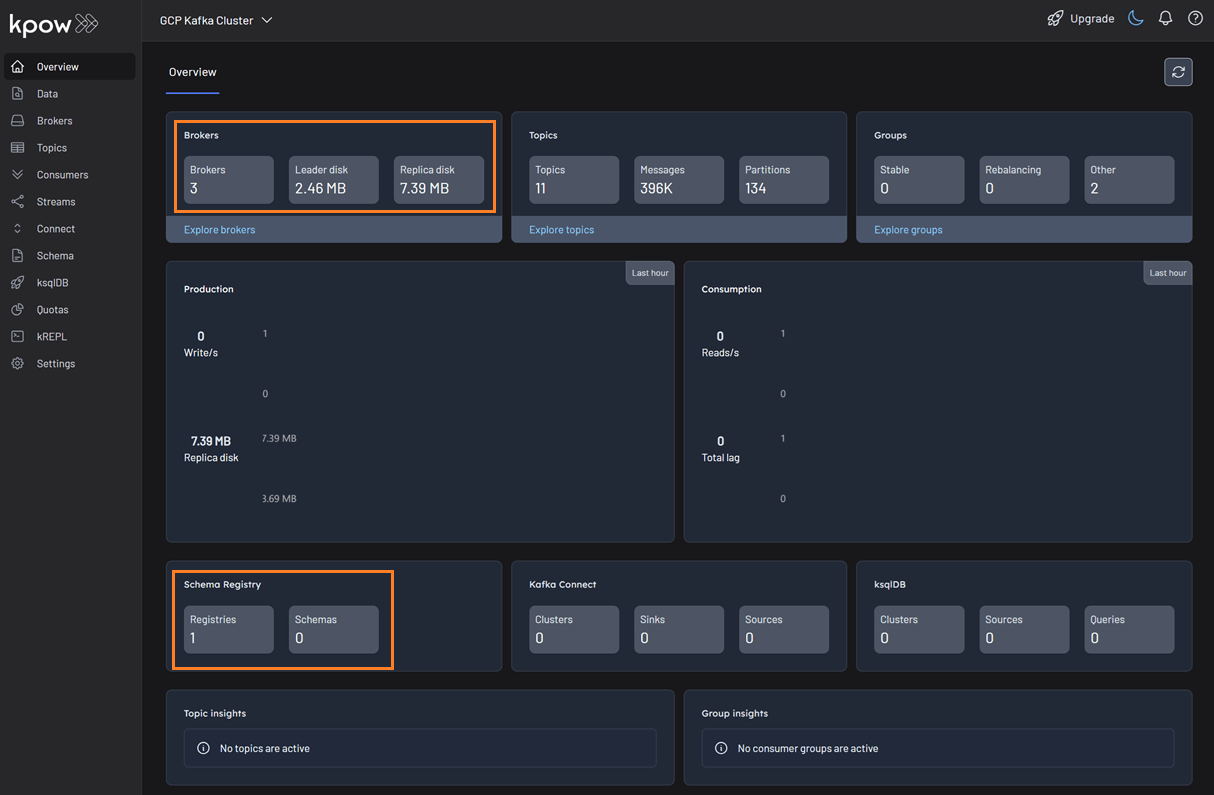

Google Cloud has enhanced its platform with the launch of a managed Schema Registry for Apache Kafka, a critical service for ensuring data quality and schema evolution in streaming architectures. Kpow 94.3 expands its support for Google Managed Service for Apache Kafka by integrating the managed schema registry. This allows users to manage Kafka clusters, topics, consumer groups, and schemas from a single interface.

Building on our earlier setup guide, this post details how to configure the new schema registry integration and demonstrates how to leverage the Kpow UI for working effectively with Avro schemas.

About Factor House

Factor House is a leader in real-time data tooling, empowering engineers with innovative solutions for Apache Kafka® and Apache Flink®.

Our flagship product, Kpow for Apache Kafka, is the market-leading enterprise solution for Kafka management and monitoring.

Start your free 30-day trial or explore our live multi-cluster demo environment to see Kpow in action.

Prerequisites

In this tutorial, we will use the Community Edition of Kpow, where the default user has all the necessary permissions to complete the tasks. For those using the Kpow Enterprise Edition with user authorization enabled, the logged-in user must have the SCHEMA_CREATE permission for Role-Based Access Control or have ALLOW_SCHEMA_CREATE=true set for Simple Access Control. More information can be found in the Kpow User Authorization documentation.

We also assume that a Managed Kafka cluster has already been created, as detailed in the earlier setup guide. This cluster will serve as the foundation for the configurations and operations covered in this tutorial.

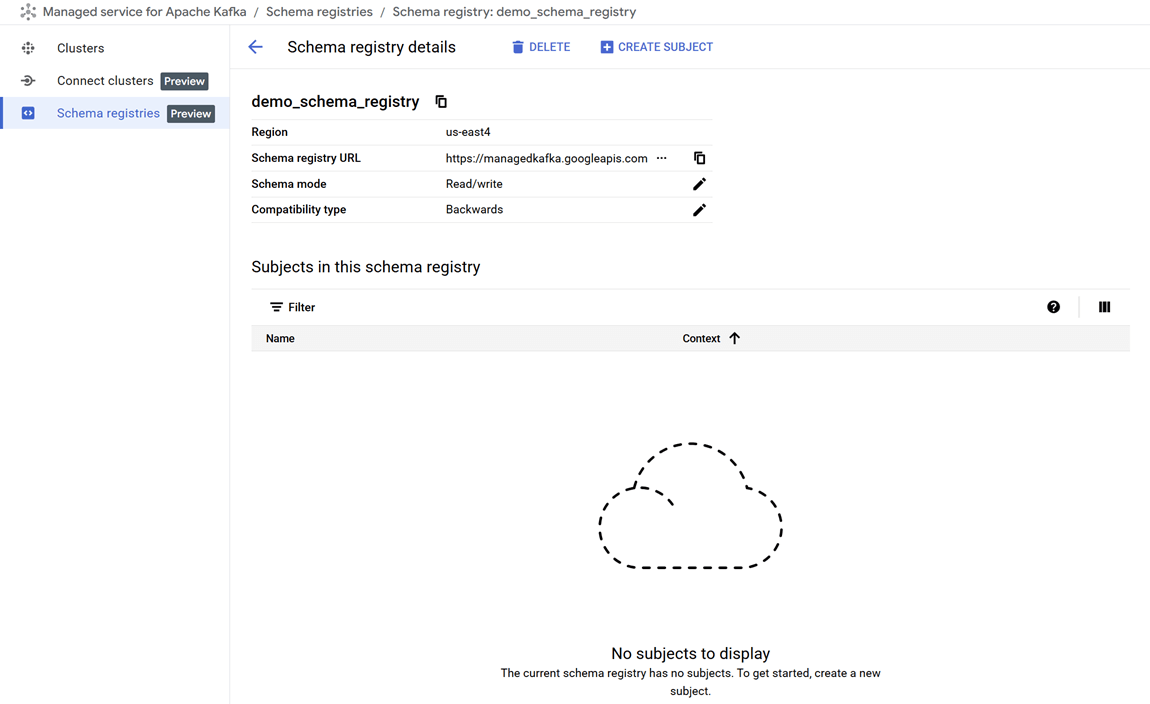

Create a Google Managed Schema Registry

We can create a schema registry using the gcloud beta managed-kafka schema-registries create command as shown below.

gcloud beta managed-kafka schema-registries create demo_schema_registry \
  --location=$REGION

Once the command completes, we can verify that the new registry, demo_schema_registry, is visible in the GCP Console under the Kafka services.

Set up a client VM

The default service account used by the client VM is granted the two roles listed below. These roles give Kpow administrative access, but user-level permissions can still be controlled with User Authorization, an enterprise-only feature.

- Managed Kafka Admin: Grants full access to manage Kafka topics, configurations, and access controls in GCP’s managed Kafka environment.

- Schema Registry Admin: Allows registering, evolving, and managing schemas and compatibility settings in the Schema Registry.

To connect to the Kafka cluster, Kpow must run on a machine with network access to it. In this setup, we use a Google Cloud Compute Engine VM that must be in the same region, VPC, and subnet as the Kafka cluster. We also attach the http-server tag to allow HTTP traffic, enabling browser access to Kpow’s UI.

We can create the client VM using the following command:

gcloud compute instances create kafka-test-instance \
  --scopes=https://www.googleapis.com/auth/cloud-platform \
  --tags=http-server \
  --subnet=projects/$PROJECT_ID/regions/$REGION/subnetworks/default \
  --zone=$REGION-a

Launch a Kpow Instance

Once our client VM is up and running, we'll connect to it using the SSH-in-browser tool provided by Google Cloud. After establishing the connection, the first step is to install Docker Engine, as Kpow will be launched using Docker. Refer to the official installation and post-installation guides for detailed instructions.

Preparing Kpow Configuration

To get Kpow running with a Google Cloud managed Kafka cluster and its schema registry, we prepare a configuration file (gcp-trial.env) that defines all necessary connection and authentication settings, as well as the Kpow license details.

The configuration is divided into three main parts: Kafka cluster connection, schema registry integration, and license activation.

## Kafka Cluster Configuration

ENVIRONMENT_NAME=GCP Kafka Cluster

BOOTSTRAP=bootstrap.<cluster-id>.<gcp-region>.managedkafka.<gcp-project-id>.cloud.goog:9092

SECURITY_PROTOCOL=SASL_SSL

SASL_MECHANISM=OAUTHBEARER

SASL_LOGIN_CALLBACK_HANDLER_CLASS=com.google.cloud.hosted.kafka.auth.GcpLoginCallbackHandler

SASL_JAAS_CONFIG=org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required;

## Schema Registry Configuration

SCHEMA_REGISTRY_NAME=GCP Schema Registry

SCHEMA_REGISTRY_URL=https://managedkafka.googleapis.com/v1/projects/<gcp-project-id>/locations/<gcp-region>/schemaRegistries/<registry-id>

SCHEMA_REGISTRY_BEARER_AUTH_CUSTOM_PROVIDER_CLASS=com.google.cloud.hosted.kafka.auth.GcpBearerAuthCredentialProvider

SCHEMA_REGISTRY_BEARER_AUTH_CREDENTIALS_SOURCE=CUSTOM

## Your License Details

LICENSE_ID=<license-id>

LICENSE_CODE=<license-code>

LICENSEE=<licensee>

LICENSE_EXPIRY=<license-expiry>

LICENSE_SIGNATURE=<license-signature>

In the Kafka Cluster Configuration section, the ENVIRONMENT_NAME variable sets a friendly label visible in the Kpow user interface. The BOOTSTRAP variable specifies the Kafka bootstrap server address, incorporating the cluster ID, Google Cloud region, and project ID.

Authentication and secure communication are handled via SASL over SSL using OAuth tokens. The SASL_MECHANISM is set to OAUTHBEARER, enabling OAuth-based authentication. The class GcpLoginCallbackHandler automatically manages OAuth tokens using the VM's service account or a specified credentials file, simplifying token management and securing Kafka connections.

The Schema Registry Configuration section integrates Kpow with Google Cloud's managed Schema Registry service. The SCHEMA_REGISTRY_NAME is a descriptive label for the registry in Kpow. The SCHEMA_REGISTRY_URL points to the REST API endpoint for the schema registry; placeholders must be replaced with the actual project ID, region, and registry ID.
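Because both endpoint values follow a fixed pattern, they can be assembled from a handful of identifiers. The sketch below is illustrative only: `my-project`, `us-central1`, and `demo-cluster` are hypothetical placeholder values, and `demo_schema_registry` is the registry name used earlier in this post.

```shell
#!/usr/bin/env bash
# Hypothetical identifiers: substitute the values for your own project.
PROJECT_ID="my-project"
REGION="us-central1"
CLUSTER_ID="demo-cluster"
REGISTRY_ID="demo_schema_registry"

# Bootstrap address, following the BOOTSTRAP pattern in gcp-trial.env.
BOOTSTRAP="bootstrap.${CLUSTER_ID}.${REGION}.managedkafka.${PROJECT_ID}.cloud.goog:9092"

# Registry endpoint, following the SCHEMA_REGISTRY_URL pattern in gcp-trial.env.
SCHEMA_REGISTRY_URL="https://managedkafka.googleapis.com/v1/projects/${PROJECT_ID}/locations/${REGION}/schemaRegistries/${REGISTRY_ID}"

# Print both lines in env-file form so they can be pasted into gcp-trial.env.
printf 'BOOTSTRAP=%s\n' "$BOOTSTRAP"
printf 'SCHEMA_REGISTRY_URL=%s\n' "$SCHEMA_REGISTRY_URL"
```

Generating the two lines this way helps keep the project ID and region consistent between the Kafka and schema registry settings.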

For authentication, Kpow uses Google's GcpBearerAuthCredentialProvider to acquire OAuth2 tokens when accessing the schema registry API. Setting SCHEMA_REGISTRY_BEARER_AUTH_CREDENTIALS_SOURCE to CUSTOM tells Kpow to use this provider, allowing seamless and secure schema fetch and management with Google Cloud's identity controls.
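Kpow handles tokens on its own, but it can be useful to confirm from the VM that the registry endpoint accepts a bearer token at all. The following is a rough sketch, not part of the Kpow setup. It assumes the gcloud CLI is authenticated as an identity with registry access, and that the registry exposes a Confluent-style `/subjects` path under the URL configured above. The helper names `subjects_url` and `list_subjects` are made up for this example.

```shell
#!/usr/bin/env bash
# Build the subjects endpoint from a registry URL (a trailing slash is tolerated).
subjects_url() {
  printf '%s/subjects\n' "${1%/}"
}

# Request the list of subjects using a short-lived access token from gcloud.
# Assumption: the registry serves a Confluent-style /subjects path.
list_subjects() {
  curl --silent --fail \
    --header "Authorization: Bearer $(gcloud auth print-access-token)" \
    "$(subjects_url "$1")"
}

# Usage (not executed here): list_subjects "$SCHEMA_REGISTRY_URL"
```

If the call fails, checking the roles on the VM's service account is a reasonable first step before looking at Kpow itself.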

Finally, the License Details section contains essential license parameters required to activate and run Kpow.
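A common slip is leaving one of the `<...>` placeholders in the file. As a small safeguard, a check along these lines could be run before starting the container. The function name `check_placeholders` is hypothetical, and the pattern only looks for angle-bracket placeholders of the style used in this post.

```shell
#!/usr/bin/env bash
# Succeeds only if the env file is readable and has no "<placeholder>" values left.
# Offending lines are printed with their line numbers.
check_placeholders() {
  local env_file="$1"
  if [ ! -r "$env_file" ]; then
    echo "Cannot read $env_file" >&2
    return 2
  fi
  if grep -n -E '=.*<[A-Za-z-]+>' "$env_file"; then
    echo "Unreplaced placeholders found in $env_file" >&2
    return 1
  fi
  return 0
}

# Usage: check_placeholders gcp-trial.env
```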

Launching Kpow

Once the gcp-trial.env file is ready, we can launch Kpow using Docker. The command below pulls the latest Community Edition image, loads the environment config, and binds port 3000 (Kpow UI) to port 80 on the host VM. This allows us to access the Kpow UI directly in the browser at http://<vm-external-ip>:

docker run --pull=always -p 80:3000 --name kpow \
  --env-file gcp-trial.env -d factorhouse/kpow-ce:latest
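Since the container starts in detached mode, it may take a little while before the UI answers. One way to wait for it, sketched below, is to poll the mapped port from the VM. The function name `wait_for_kpow` and its default retry values are assumptions made for this example, and it simply treats any successful HTTP response from the root URL as "ready".

```shell
#!/usr/bin/env bash
# Poll a URL until it answers or the attempts run out.
# Arguments: URL, number of attempts (default 30), seconds between attempts (default 2).
wait_for_kpow() {
  local url="$1"
  local attempts="${2:-30}"
  local delay="${3:-2}"
  local i
  for i in $(seq 1 "$attempts"); do
    if curl --silent --fail --output /dev/null --max-time 5 "$url"; then
      echo "Kpow UI is responding at $url"
      return 0
    fi
    sleep "$delay"
  done
  echo "No response from $url; inspect the container with: docker logs kpow" >&2
  return 1
}

# Usage on the VM (not executed here): wait_for_kpow http://localhost:80
```

If the function gives up, `docker logs kpow` usually shows whether the failure relates to the license, the Kafka connection, or the schema registry settings.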

Schema Management

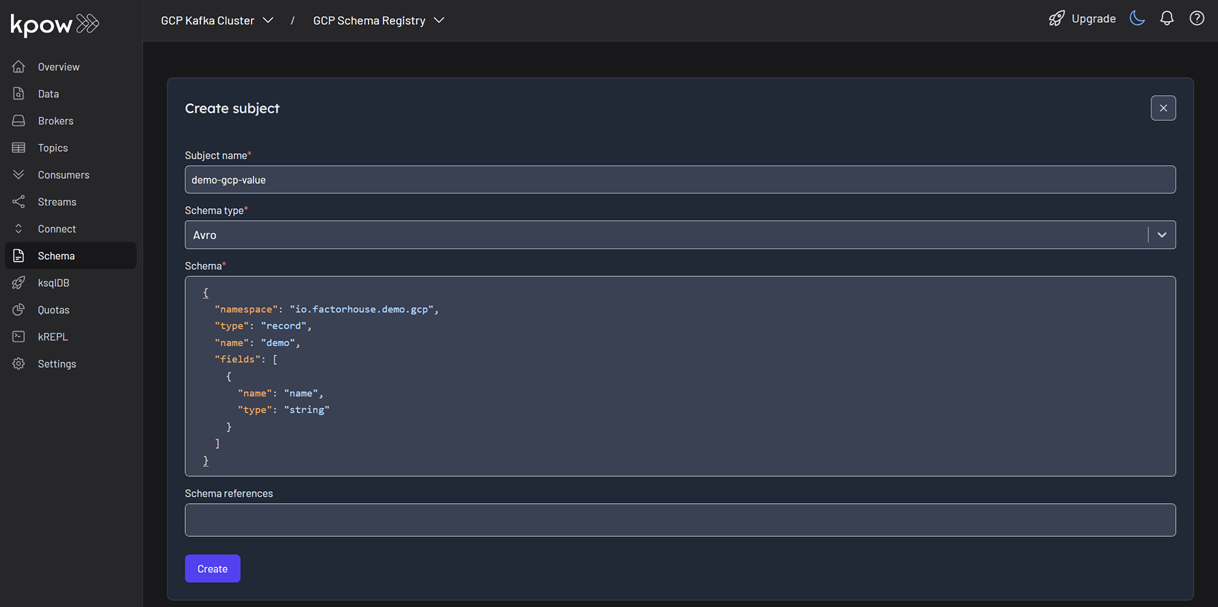

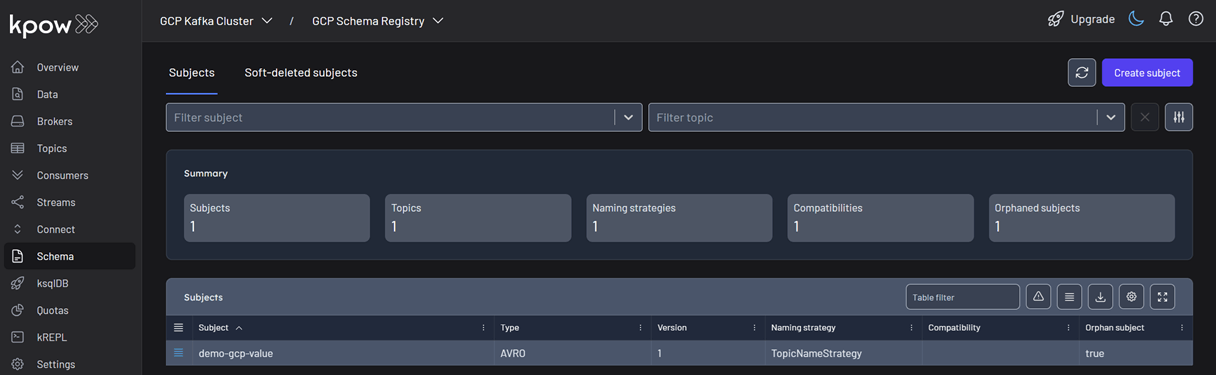

With our environment up and running, we can use Kpow to create a new schema subject in the GCP Schema Registry.

- In the Schema menu, click Create subject.

- Since we only have one registry configured, the GCP Schema Registry is selected by default.

- Enter a subject name (e.g., demo-gcp-value), choose AVRO as the type, and provide a schema definition. Click Create.

Once created, the new subject appears in the Schema menu within Kpow. This allows us to easily view, manage, and interact with the schema.
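For the schema definition itself, any valid Avro schema will do. As an illustration only, the snippet below writes a small record schema and a matching JSON value to local files so they can be pasted into the Kpow forms. The record name, namespace, field names, and file names are all hypothetical and are not taken from the original setup.

```shell
#!/usr/bin/env bash
# Hypothetical Avro record schema for the demo-gcp-value subject.
cat > demo-gcp-value.avsc <<'EOF'
{
  "type": "record",
  "name": "DemoRecord",
  "namespace": "io.example.demo",
  "fields": [
    {"name": "id", "type": "string"},
    {"name": "amount", "type": "double"}
  ]
}
EOF

# A JSON value that conforms to the schema above (string id, double amount).
cat > demo-record.json <<'EOF'
{"id": "order-1", "amount": 12.5}
EOF
```

The contents of demo-gcp-value.avsc go into the schema definition field when creating the subject, and a value shaped like demo-record.json can be used later when producing a record.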

Working with Avro Data

Next, we'll produce and inspect an Avro record that is validated against the schema we just created.

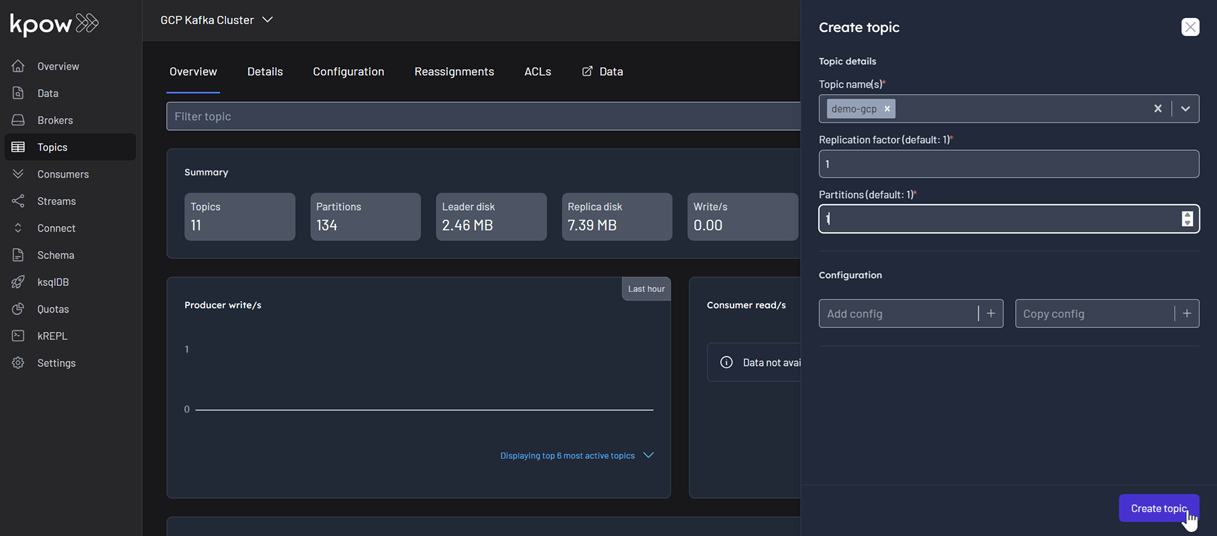

First, create a new topic named demo-gcp from the Kpow UI.

Now, to produce a record to the demo-gcp topic:

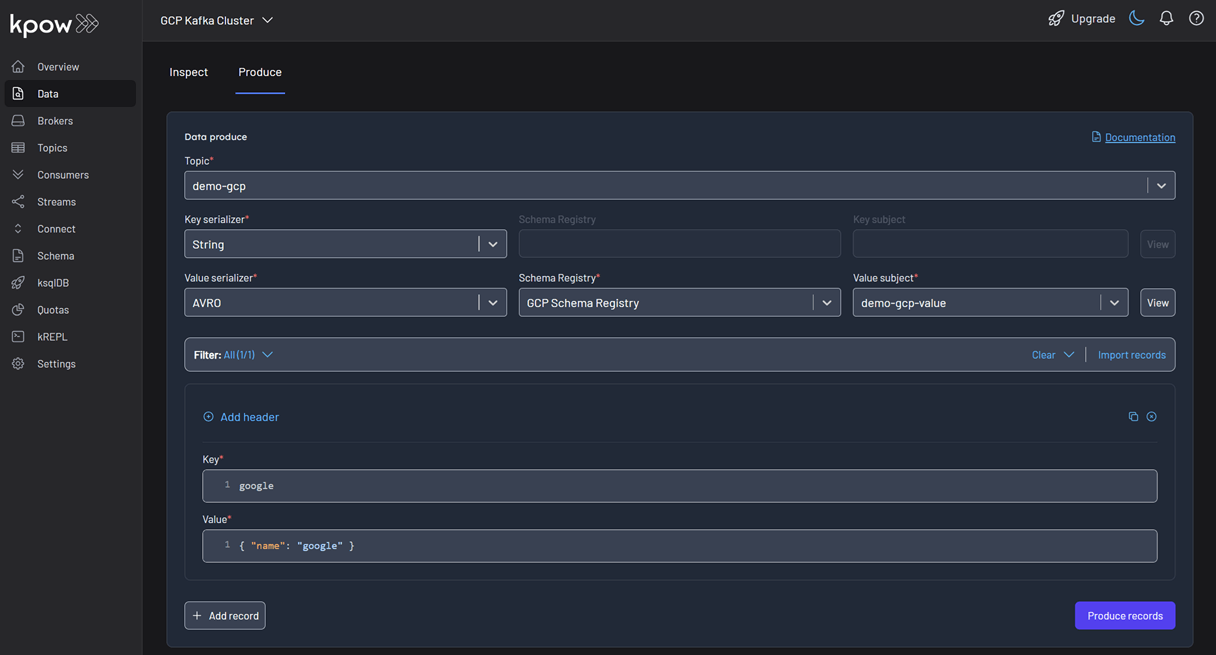

- Go to the Data menu, select the topic, and open the Produce tab.

- Select String as the Key Serializer.

- Set the Value Serializer to AVRO.

- Choose GCP Schema Registry as the Schema Registry.

- Select the demo-gcp-value subject.

- Enter key/value data and click Produce.

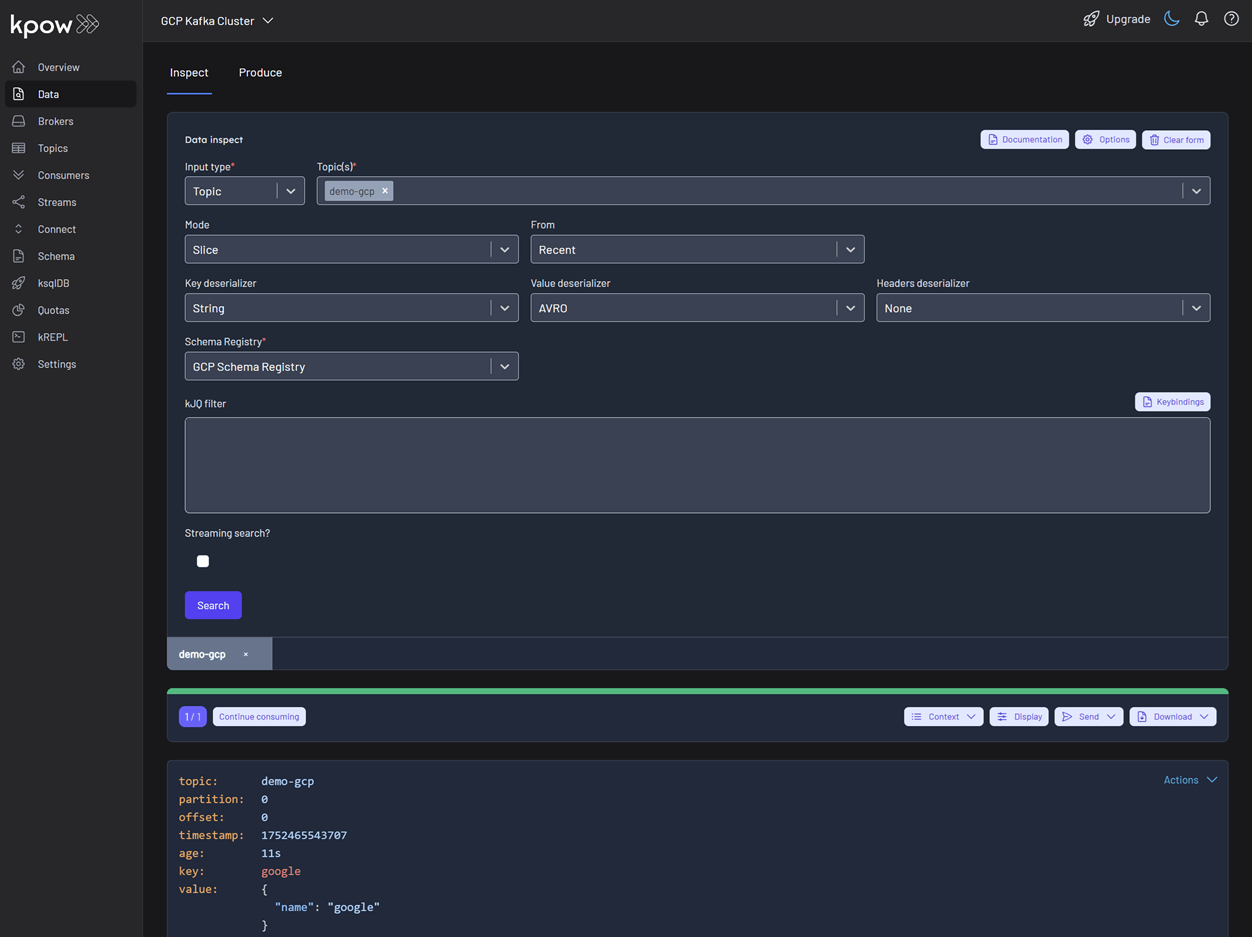

To see the result, navigate back to the Inspect tab and select the demo-gcp topic. In the deserializer options, choose String as the Key deserializer and AVRO as the Value deserializer, then select GCP Schema Registry. Kpow automatically fetches the correct schema version, deserializes the binary Avro message, and presents the data as easy-to-read JSON.

Tip: Kpow 94.3 introduces automatic deserialization of keys and values. For users unfamiliar with a topic's data format, selecting Auto lets Kpow attempt to infer and deserialize the records automatically as they are consumed.

Conclusion

Integrating Kpow with Google Cloud's Managed Schema Registry consolidates our entire Kafka management workflow into a single, powerful platform. By following this guide, we have seen how to configure Kpow to securely connect to both GCP Managed Kafka and the Schema Registry using native OAuth authentication, completely removing the need for manual token handling.

The result is an end-to-end workflow in which we can create and manage schemas, produce and consume schema-validated data, and inspect the records, all from the Kpow UI. This combination streamlines development, strengthens data governance, and helps engineering teams make full use of Google Cloud's managed Kafka services.