Clarity and Control Over Real-Time Data

Enterprise-grade management, observability and governance for Apache Kafka® and Apache Flink®.

Discover our products

Equip your engineers with the tools they need to build real-time systems with confidence.

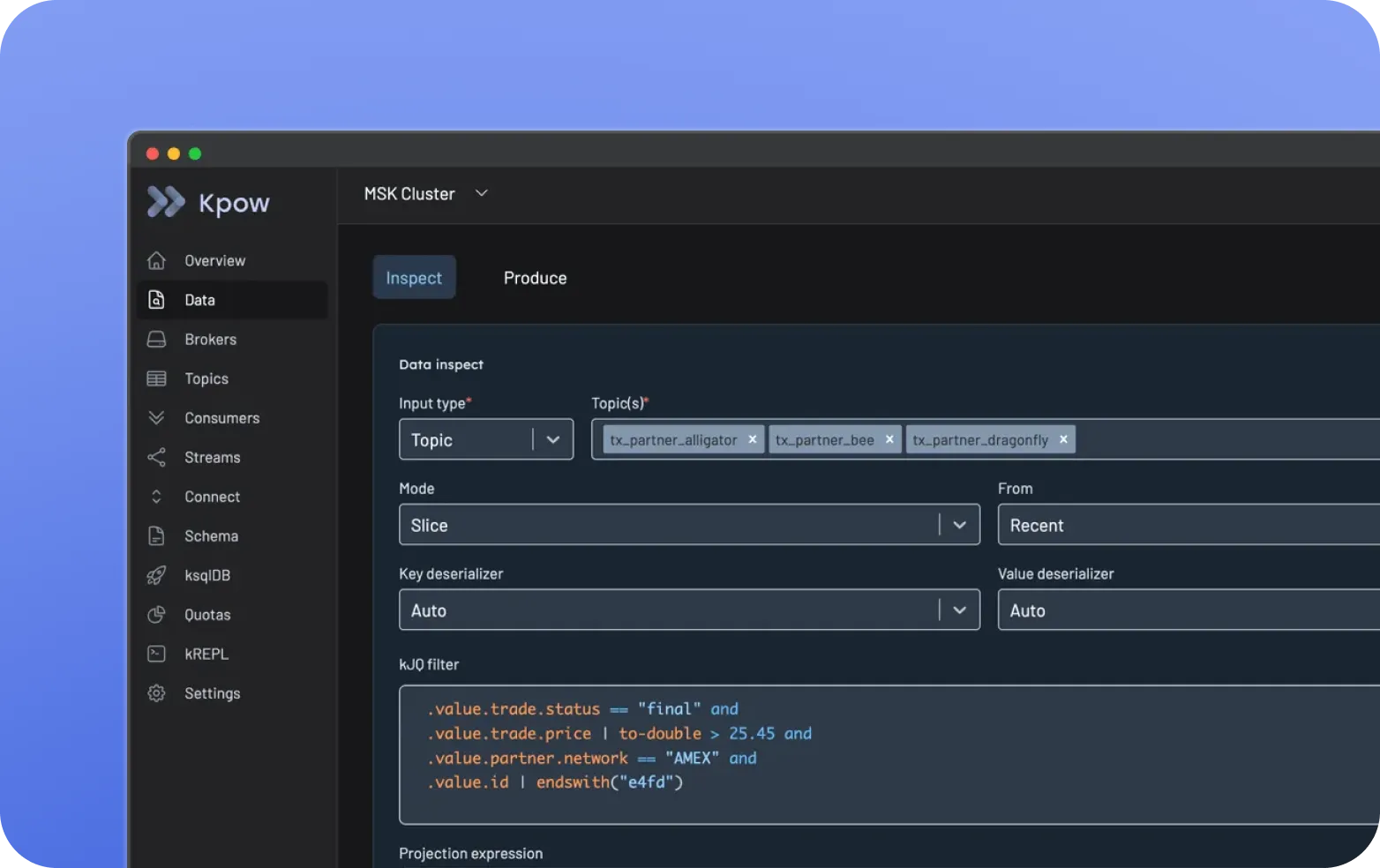

Explore and inspect Kafka data - blazing-fast multi-topic search shortens the time to resolve production issues.

Control key Kafka components - manage their complete lifecycles.

Monitor and analyze - cluster health, broker status, topic lag and throughput.

Explore and inspect Kafka data

Control Kafka resources

Monitor and analyze

Visualize Flink job execution - navigate, view, inspect, and trace.

Manage complete Flink job lifecycles - from submission with custom configurations to cancellation.

Diagnose & optimize stream pipelines - analyze, detect issues, and fine-tune pipelines.

Visualize Flink job execution

Manage and interact with the Flink API

Diagnose & optimize stream pipelines

Command Kafka, Flink and more from a single UI - centralized control of multi-cluster, multi-tech environments.

Operate securely at enterprise scale - fine-grained permissions for compliance and governance.

Gain end-to-end visibility with data lineage - visualize the complete flow of data across your real-time ecosystem.

Command Kafka, Flink and more from a single UI

Operate securely at enterprise scale

Enhance your real-time compatibility

Why Factor House

Engineer first; Enterprise always.

Built for Technical Teams

Powerful engineer-focused tooling for Kafka and Flink, enabling full observability and management of your streaming ecosystem.

Enterprise‑Ready by Design

Embedded enterprise features to scale with confidence. Retain control of security, governance, auditing, and access.


Truly Flexible, Vendor-Agnostic

Our self-managed, flexible deployment model adapts to your streaming strategy and supports Kafka and Flink vendors like MSK, Confluent, Aiven, Ververica, and more.

Proven in Production

Trusted by Fortune 500 companies. Our blue-chip clientele relies on our tech to manage their transactions, fraud, logistics, and user experience.

Designed to accelerate your team

Powering engineers, DevOps, and data teams with unmatched performance

50k

4M+

72%

Governance and compliance

Perform critical operations with certainty. Factor House products are designed for enterprise environments where governance, security, and auditable action are non-negotiable.

All Factor House products integrate with Okta, OpenID, LDAP, SAML, and Keycloak, support HTTPS, and include data masking, audit logging, and Prometheus endpoints as standard. Accessibility complies with WCAG 2.1 AA standards.

Developer Knowledge Center

Explore hands-on guides, product updates, and technical insights to get the most out of Kafka, Flink, and Factor House solutions.

Release 95.3: Memory leak fix for in-memory compute users

95.3 fixes a memory leak in our in-memory compute implementation, reported by our customers.

Release 95.2: quality-of-life improvements across Kpow, Flex & Helm deployments

95.2 focuses on refinement and operability, with improvements across the UI, consumer group workflows, and deployment configuration. Alongside bug fixes and usability improvements, this release adds new Helm options for configuring the API and controlling service account credential automounting.

Integrate Kpow with Oracle Cloud Infrastructure (OCI) Streaming with Apache Kafka

Unlock the full potential of your dedicated OCI Streaming with Apache Kafka cluster. This guide shows you how to integrate Kpow with your OCI brokers and self-hosted Kafka Connect and Schema Registry, unifying them into a single, developer-ready toolkit for complete visibility and control over your entire Kafka ecosystem.

Try it for yourself

Full access to Kpow and Flex for 30 days with a single license. No credit card required.
