Developer Knowledge Center

Empowering engineers with everything they need to build, monitor, and scale real-time data pipelines with confidence.

Release 95.2: quality-of-life improvements across Kpow, Flex & Helm deployments

95.2 focuses on refinement and operability, with improvements across the UI, consumer group workflows, and deployment configuration. Alongside bug fixes and usability improvements, this release adds new Helm options for configuring the API and controlling service account credential automounting.

Highlights

Integrate Kpow with Oracle Cloud Infrastructure (OCI) Streaming with Apache Kafka

Unlock the full potential of your dedicated OCI Streaming with Apache Kafka cluster. This guide shows you how to integrate Kpow with your OCI brokers and self-hosted Kafka Connect and Schema Registry, unifying them into a single, developer-ready toolkit for complete visibility and control over your entire Kafka ecosystem.

Unified community license for Kpow and Flex

The unified Factor House Community License works with both Kpow Community Edition and Flex Community Edition, meaning one license will unlock both products. This makes it even simpler to explore modern data streaming tools, create proof-of-concepts, and evaluate our products.

All Resources

Updates to container specifics (DockerHub and Helm Charts)

Discover how our 94.1 release has streamlined DockerHub, Helm Charts, and AWS Marketplace deployments!

A final goodbye to OperatrIO

2025 is a pivotal moment at Factor House (formerly Operatr.IO). We've announced our fundraise and have much more to announce about our roadmap this year. This is why we think that now is the perfect time to do a bit of spring cleaning and retire the io.operatr artifacts for good.

Our Commitment to Engineers

With our funding announcement and the upcoming launch of the Factor Platform, we know some of our existing customers might be wondering: What does this mean for Kpow and Flex? Will we be forced to upgrade? Will prices spike? Keep one thing in mind: at Factor House, we're here for engineers.

From Bootstrap to Blackbird: The Future of Factor House

We are thrilled to announce that Factor House has closed a $5M seed round to accelerate the commercial release of our new product, the Factor Platform. Led by Blackbird Ventures, with OIF Ventures, Flying Fox Ventures, and LaunchVic’s Alice Anderson Fund as partners, this round brings our five-year bootstrapping journey to a happy conclusion and points to a bright future ahead!

Factor House Product VPAT

We first published a VPAT in the release notes of Kpow for Apache Kafka v92.4, with a VPAT available to download in every release of Kpow since. Today, we are pleased to announce that we are extending that commitment to all future Factor House product releases - including Flex for Apache Flink and the Factor Platform.

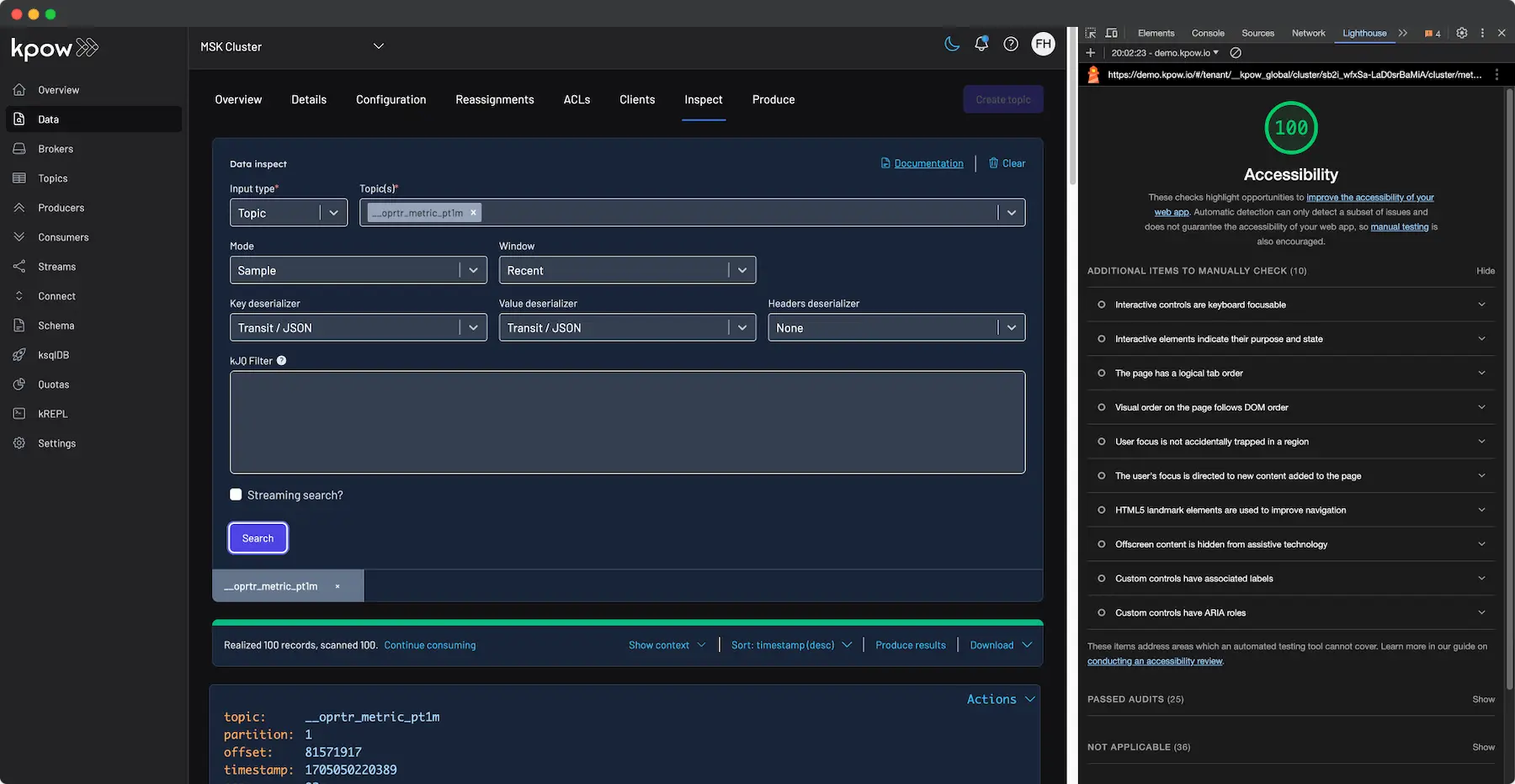

Web Accessibility at Factor House

How do skateboards and green suns drive web accessibility at Factor House? Learn why building accessible products is important to us, and how we've changed to ensure that accessibility is embedded in our development process.

Events & Webinars

Stay plugged in with the Factor House team and our community.

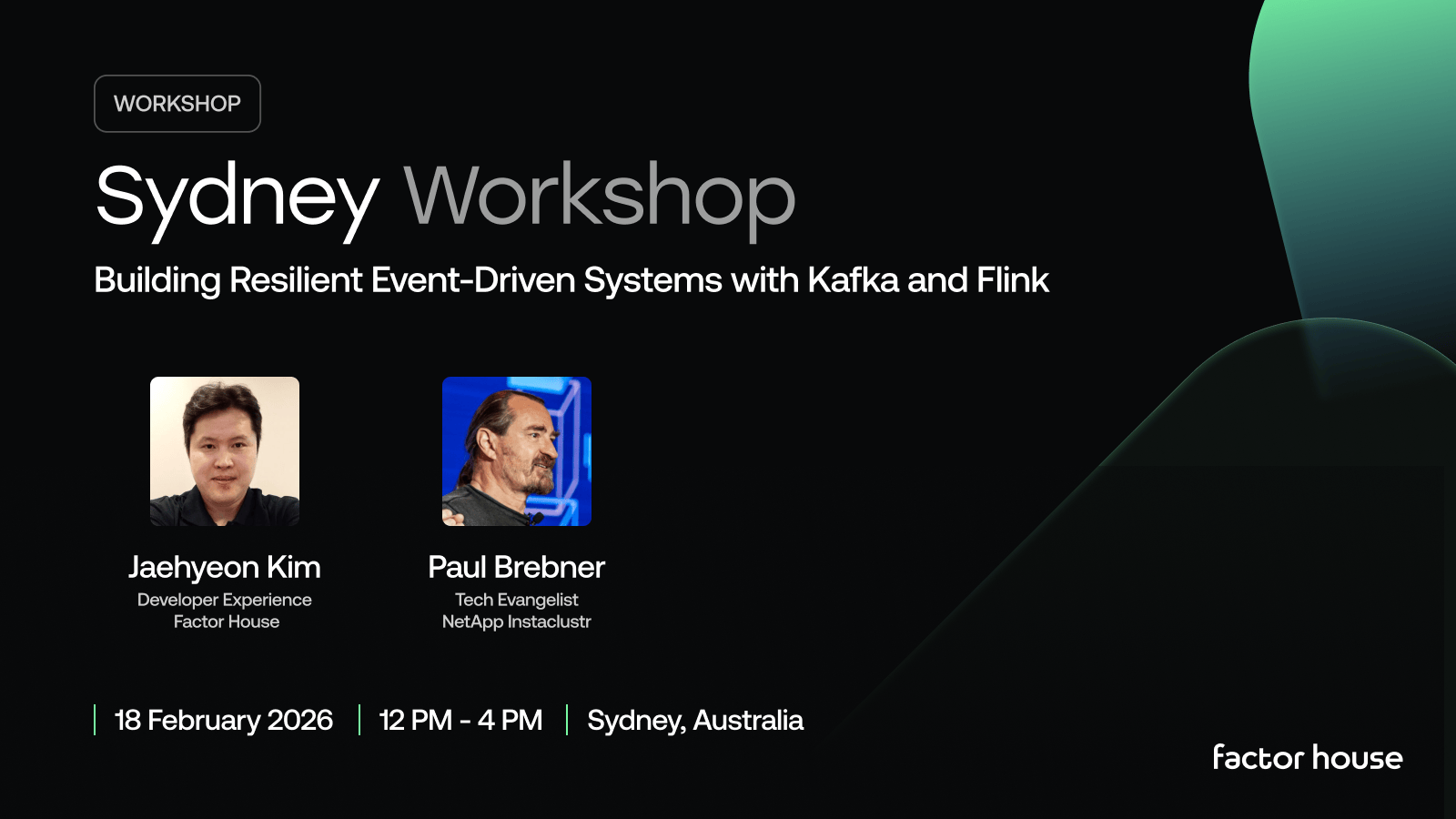

Sydney Workshop: Building Resilient Event-Driven Systems with Kafka and Flink

We're teaming up with NetApp Instaclustr and Ververica to run this intensive half-day workshop where you'll design, build, and operate a complete real-time operational system from the ground up.

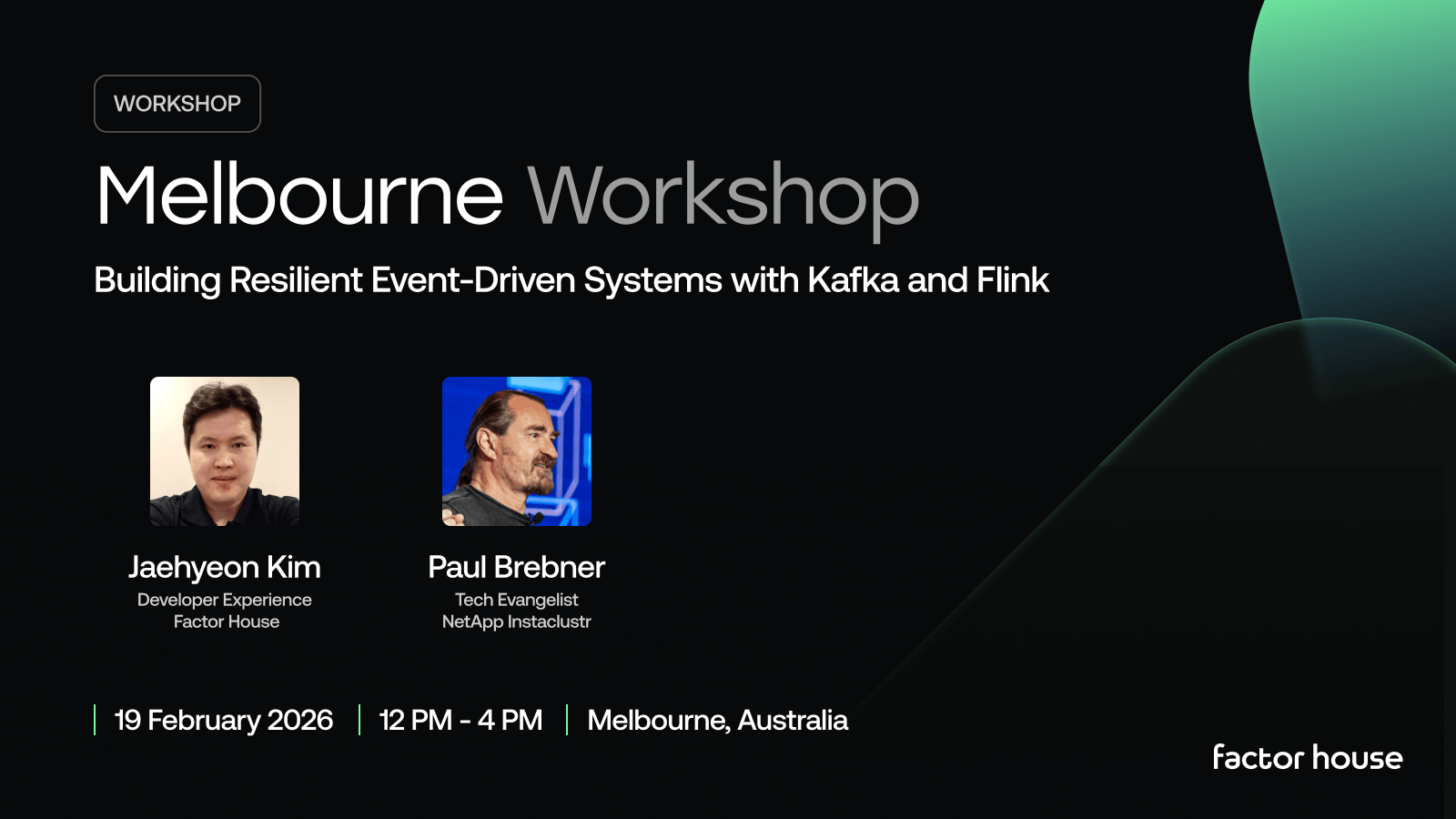

Melbourne Workshop: Building Resilient Event-Driven Systems with Kafka and Flink

We're teaming up with NetApp Instaclustr and Ververica to run this intensive half-day workshop where you'll design, build, and operate a complete real-time operational system from the ground up.

Join the Factor Community

We’re building more than products; we’re building a community. Whether you're getting started or pushing the limits of what's possible with Kafka and Flink, we invite you to connect, share, and learn with others.