Knowledge Center

Empowering engineers with everything they need to build, monitor, and scale real-time data pipelines with confidence.

Release 95.2: quality-of-life improvements across Kpow, Flex & Helm deployments

95.2 focuses on refinement and operability, with improvements across the UI, consumer group workflows, and deployment configuration. Alongside bug fixes and usability improvements, this release adds new Helm options for configuring the API and controlling service account credential automounting.

Highlights

Integrate Kpow with Oracle Cloud Infrastructure (OCI) Streaming with Apache Kafka

Unlock the full potential of your dedicated OCI Streaming with Apache Kafka cluster. This guide shows you how to integrate Kpow with your OCI brokers and self-hosted Kafka Connect and Schema Registry, unifying them into a single, developer-ready toolkit for complete visibility and control over your entire Kafka ecosystem.

Unified community license for Kpow and Flex

The unified Factor House Community License works with both Kpow Community Edition and Flex Community Edition, meaning one license will unlock both products. This makes it even simpler to explore modern data streaming tools, create proof-of-concepts, and evaluate our products.

All Resources

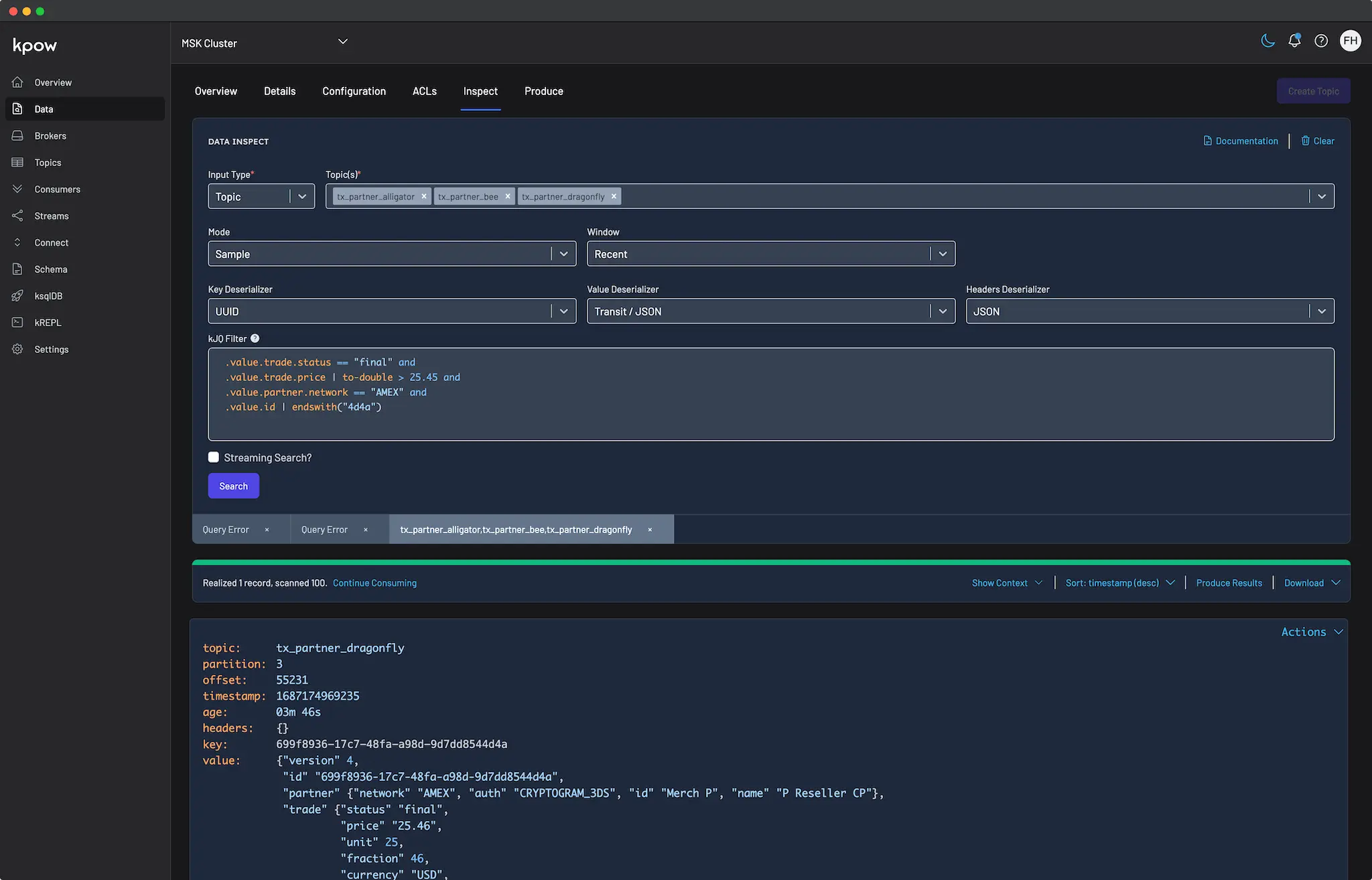

How to query a Kafka topic

Querying Kafka topics is a critical task for engineers working on data streaming applications, but it can be complex and time-consuming. Kpow's data inspect feature is designed to simplify and optimize Kafka topic queries, making it an essential tool for professionals working with Apache Kafka.

Release 93.4: Protobuf, Light Mode, and Community

This minor release from Factor House improves Protobuf rendering, sharpens light mode, simplifies Community Edition setup, resolves a number of small bugs, and bumps Kafka client dependencies to v3.7.0.

Release 93.3: Confluent Schema References

Introducing full support for schema references in Confluent Schema Registry. With this release you can create and edit Avro, JSON Schema, and Protobuf schemas with references, and consume and produce messages with those schemas.

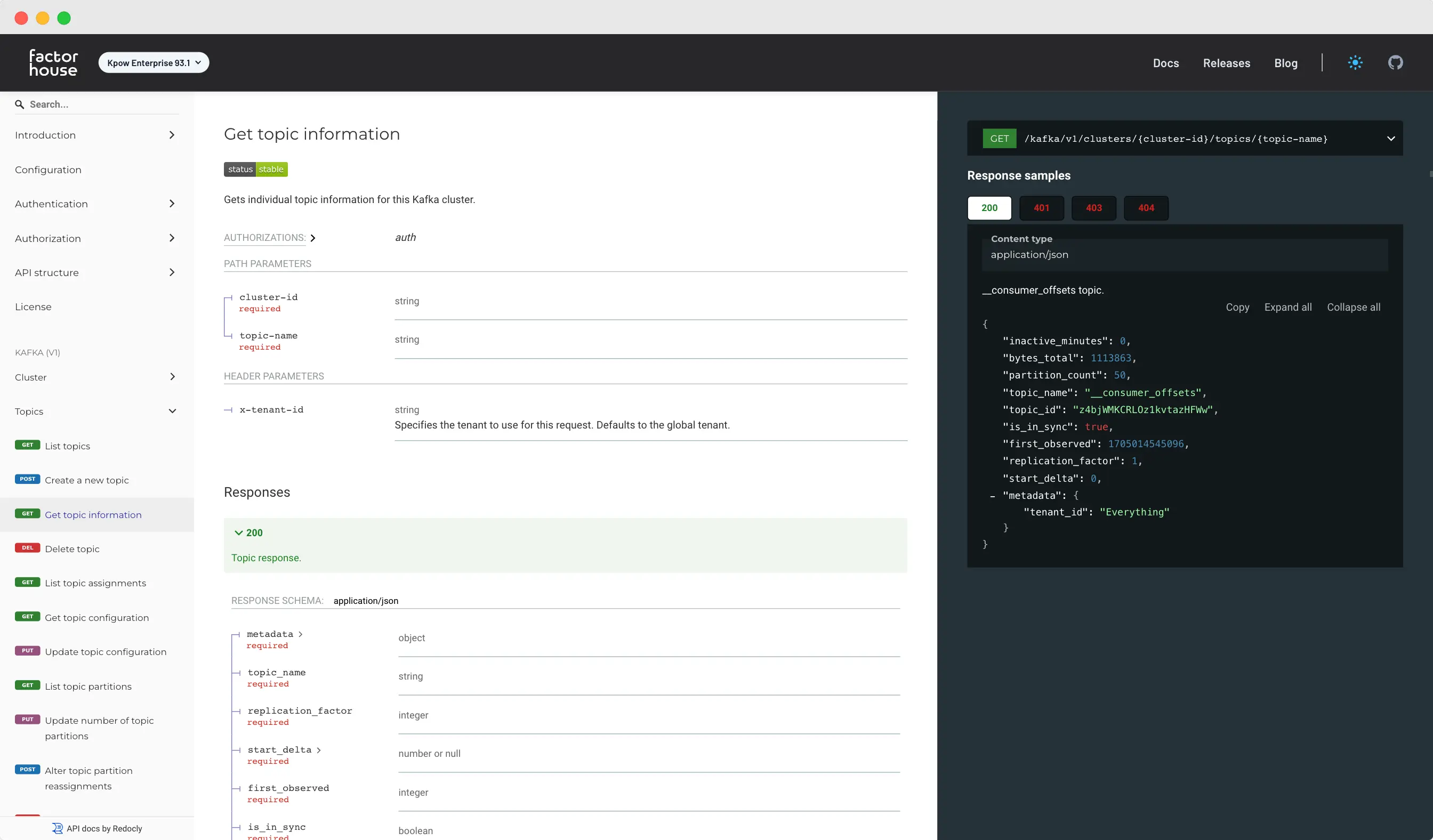

Introducing Kpow's new API

With our new API, you can leverage Kpow's capabilities directly from your own tools and platforms, opening up new possibilities for integrating Kpow into your existing workflows. Whether you're managing topics and consumer groups or monitoring Kafka clusters, the API mirrors the functionality of our user interface.

Release 93.2: Introducing Connector Auto-Restart

Kpow can now automatically restart connectors when they fail. Read on to learn how to make your connectors more reliable.

Release 92.4: Kpow WCAG 2.1 AA Accessibility Compliance

Our mission at Factor House is to empower every engineer in the streaming tech space with superb tooling. We are pleased to report that Kpow for Apache Kafka is now compliant with WCAG 2.1 AA accessibility guidelines and has an independently audited Voluntary Product Accessibility Template (VPAT) report.

Events & Webinars

Stay plugged in with the Factor House team and our community.

Sydney Workshop: Building Resilient Event-Driven Systems with Kafka and Flink

We're teaming up with NetApp Instaclustr and Ververica to run this intensive half-day workshop where you'll design, build, and operate a complete real-time operational system from the ground up.

Melbourne Workshop: Building Resilient Event-Driven Systems with Kafka and Flink

We're teaming up with NetApp Instaclustr and Ververica to run this intensive half-day workshop where you'll design, build, and operate a complete real-time operational system from the ground up.

Join the Factor Community

We’re building more than products; we’re building a community. Whether you're getting started or pushing the limits of what's possible with Kafka and Flink, we invite you to connect, share, and learn with others.