Integrate Kpow with Oracle Cloud Infrastructure (OCI) Streaming with Apache Kafka


Overview

When working with real-time data on Oracle Cloud Infrastructure (OCI), you have two powerful, Kafka-compatible streaming services to choose from:

- OCI Streaming with Apache Kafka: A dedicated, managed service that gives you full control over your own Apache Kafka cluster.

- OCI Streaming: A serverless, Kafka-compatible platform designed for effortless, scalable data ingestion.

Choosing the dedicated OCI Streaming with Apache Kafka service gives you maximum control and the complete functionality of open-source Kafka. However, this control comes with a trade-off: unlike some other managed platforms, OCI does not provide managed Kafka Connect or Schema Registry services, and instead recommends that you run them on your own compute instances.

This guide will walk you through integrating Kpow with your OCI Kafka cluster, alongside self-hosted instances of Kafka Connect and Schema Registry. The result is a complete, developer-ready environment that provides full visibility and control over your entire Kafka ecosystem.

❗ Note on the serverless OCI Streaming service: You can connect Kpow to OCI's serverless offering, but Kpow's functionality there is limited because some Kafka APIs have not yet been implemented. Our OCI provider documentation explains how to connect, and you can review the specific API gaps in the official Oracle documentation.

💡 Explore our setup guides for other leading platforms like Confluent Cloud, Amazon MSK, and Google Cloud Kafka, or emerging solutions like Redpanda, BufStream, and the Instaclustr Platform.

About Factor House

Factor House is a leader in real-time data tooling, empowering engineers with innovative solutions for Apache Kafka® and Apache Flink®.

Our flagship product, Kpow for Apache Kafka, is the market-leading enterprise solution for Kafka management and monitoring.

Start your free 30-day trial or explore our live multi-cluster demo environment to see Kpow in action.


Prerequisites

Before creating a Kafka cluster, you must set up the necessary network infrastructure within your OCI tenancy. The Kafka cluster is deployed directly into this network, and the same network configuration is what allows client applications such as Kpow to connect securely to the brokers.

As detailed in the official OCI documentation, you will need:

- A Virtual Cloud Network (VCN): The foundational network for your cloud resources.

- A Subnet: A subdivision of your VCN where you will launch the Kafka cluster and client VM.

- Security Rules: Ingress rules configured in a Security List or Network Security Group to allow traffic on the required ports. For this guide, which uses SASL/SCRAM, you must open port 9092. If you were using mTLS, you would open port 9093.
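Once the cluster and the client VM (created later in this guide) are both running, a quick way to confirm that the security rules actually admit traffic on the broker port is a raw TCP check from the VM. The sketch below assumes bash and the coreutils `timeout` command; `check_port` is a hypothetical helper name, not part of any OCI or Kafka tooling.

```bash
#!/usr/bin/env bash
# check_port HOST PORT [TIMEOUT_SECONDS]
# Hypothetical helper: succeeds (exit 0) if a TCP connection to HOST:PORT
# can be opened within the timeout, and fails otherwise. It relies on
# bash's /dev/tcp pseudo-device and the coreutils `timeout` command.
check_port() {
  local host="$1" port="$2" secs="${3:-5}"
  # Open file descriptor 3 on the TCP socket in a child bash; the child
  # exits non-zero if the connection is refused or times out.
  if timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "open: ${host}:${port}"
    return 0
  fi
  echo "unreachable: ${host}:${port}" >&2
  return 1
}
```

For example, run `check_port <broker-host> 9092` with a host name taken from the SASL-SCRAM bootstrap servers list. A successful TCP connection only shows that the port is open; it does not validate TLS or the SCRAM credentials.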

Create a Vault Secret

OCI Kafka leverages the OCI Vault service to securely manage the credentials used for SASL/SCRAM authentication.

First, create a Vault in your desired compartment. Inside that Vault, create a new Secret with the following JSON content, replacing the placeholder values with your desired username and a strong password.

```json
{ "username": "<vault-username>", "password": "<vault-password>" }
```

Take note of the following details, as you will need them when creating the Kafka cluster:

- SASL SCRAM - Vault compartment: <compartment-name>

- SASL SCRAM - Vault: <vault-name>

- SASL SCRAM - Secret compartment: <compartment-name>

- SASL SCRAM - Secret: <secret-name>
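If you don't already have a password in mind, a small script can produce the secret content in the JSON shape shown above with a random password. This is a sketch under a few assumptions: `make_scram_secret` is a hypothetical helper, `openssl` is installed, and the username contains no characters that need JSON escaping.

```bash
#!/usr/bin/env bash
# make_scram_secret USERNAME [PASSWORD]
# Hypothetical helper: prints Vault secret content in the
# {"username": ..., "password": ...} shape used by this guide.
# If PASSWORD is omitted, a random 48-character hex password is generated.
# Assumption: neither value contains double quotes or backslashes,
# because no JSON escaping is performed.
make_scram_secret() {
  local user="$1"
  local pass="${2:-$(openssl rand -hex 24)}"
  printf '{ "username": "%s", "password": "%s" }\n' "$user" "$pass"
}
```

Running `make_scram_secret kpow-user` prints a line you can paste as the secret's contents. A hex password is used so it can be dropped into the quoted JAAS strings and YAML later in the guide without escaping; the same username and password are what replace `<VAULT_USERNAME>` and `<VAULT_PASSWORD>` in the Docker Compose file.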

Create IAM Policies

To allow OCI to manage your Kafka cluster and its associated network resources, you must create several IAM policies. These policies grant permissions to both user groups (for administrative actions) and the Kafka service principal (for operational tasks).

The required policies are detailed in the "Required IAM Policies" section of the OCI Kafka documentation. Apply these policies in your tenancy's root compartment to ensure the Kafka service has the necessary permissions.

Create a Kafka Cluster

With the prerequisites in place, you can now create your Kafka cluster from the OCI console.

- Navigate to Developer Services > Application Integration > OCI Streaming with Apache Kafka.

- Click Create cluster and follow the wizard:

  - Cluster settings: Provide a name, select your compartment, and choose a Kafka version (e.g., 3.7).

  - Broker settings: Choose the number of brokers, the OCPU count per broker, and the block volume storage per broker.

  - Cluster configuration: OCI creates a default configuration for the cluster. You can review and edit its properties here. For this guide, add `auto.create.topics.enable=true` to the default configuration so that the `orders` topic used later is created automatically when the connector first writes to it. Note that after creation, the cluster's configuration can only be changed using the OCI CLI or SDK.

  - Security settings: This section is for configuring mutual TLS (mTLS). Since this guide uses SASL/SCRAM, leave this section blank. We will configure SASL/SCRAM after the cluster is created.

  - Networking: Choose the VCN and subnet you configured in the prerequisites.

- Review your settings and click Create. OCI will begin provisioning your dedicated Kafka cluster.

- Once the cluster's status becomes Active, select it from the cluster list page to view its details.

- From the details page, select the Actions menu and then select Update SASL SCRAM.

- In the Update SASL SCRAM panel, select the Vault and the Secret that contain your secure credentials.

- Select Update.

- After the update is complete, return to the Cluster Information section and copy the Bootstrap Servers endpoint for SASL-SCRAM. You will need this for the next steps.
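Before deploying anything else, you can sanity-check the bootstrap endpoint and SCRAM credentials with the standard Apache Kafka command-line tools from any host inside the VCN, such as the client VM created in the next section. The sketch below writes a client properties file with the same `SASL_SSL` and `SCRAM-SHA-512` settings that the Docker Compose services in this guide use. `write_client_properties` and the file name are hypothetical, and it assumes the password contains no double quotes or backslashes.

```bash
#!/usr/bin/env bash
# write_client_properties FILE USERNAME PASSWORD
# Hypothetical helper: writes standard Kafka client settings for
# SASL/SCRAM over TLS (security.protocol, sasl.mechanism, sasl.jaas.config).
write_client_properties() {
  local file="$1" user="$2" pass="$3"
  # Unquoted EOF so ${user} and ${pass} are substituted into the file.
  cat > "$file" <<EOF
security.protocol=SASL_SSL
sasl.mechanism=SCRAM-SHA-512
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="${user}" password="${pass}";
EOF
}
```

For example, `write_client_properties client.properties <vault-username> <vault-password>`. With the Apache Kafka binaries downloaded on that host (an assumption; nothing in this guide installs them), `kafka-topics.sh --bootstrap-server "<bootstrap-server-address>" --command-config client.properties --list` should return the topic list if authentication succeeds.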

Launch a Client VM

We need a virtual machine to host Kpow, Kafka Connect, and Schema Registry. This VM must have network access to the Kafka cluster.

- Create Instance & Save SSH Key: Navigate to Compute > Instances and begin to create a new compute instance.

  - Select an Ubuntu image.

  - In the "Add SSH keys" section, choose the option to "Generate a key pair for me" and click the "Save Private Key" button. This is your only chance to download this key, which is required for SSH access.

- Configure Networking: During the instance creation, configure the networking as follows:

  - Placement: Assign the instance to the same VCN as your Kafka cluster, in a subnet that can reach your Kafka brokers.

  - Kpow UI Access: Ensure the subnet's security rules allow inbound TCP traffic on port 3000. This opens the port for the Kpow web interface.

  - Internet Access: The instance needs outbound access to pull the Docker images and download the data generator connector.

    - Simple Setup: For development, place the instance in a public subnet with an Internet Gateway.

    - Secure (Production): We recommend using a private subnet with a NAT Gateway. This allows outbound connections without exposing the instance to inbound internet traffic.

- Connect and Install Docker: Once the VM is in the "Running" state, use the private key you saved to SSH into it (via its public IP, or its private IP if you connect through a bastion or VPN) and install Docker along with the Docker Compose plugin.
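For reference, the sketch below shows one way to carry out this step and then confirm the tools are available. It assumes the default `ubuntu` user of OCI's Ubuntu images, a private key saved locally as `oci-vm.key` (a hypothetical file name), and Docker's convenience script at get.docker.com, which also installs the Docker Compose plugin. `require_cmds` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# From your workstation (assumed key file name and default Ubuntu user):
#   chmod 400 ./oci-vm.key
#   ssh -i ./oci-vm.key ubuntu@<vm-ip-address>
# On the VM, one common way to install Docker and the Compose plugin:
#   curl -fsSL https://get.docker.com | sudo sh
#   sudo usermod -aG docker "$USER"   # log out and back in afterwards

# require_cmds CMD...
# Hypothetical helper: reports every given command that is missing from
# PATH and returns non-zero if at least one is missing.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

On the VM, `require_cmds docker curl && docker compose version` should succeed before you continue.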

Deploying Kpow with Supporting Instances

On your client VM, we will use Docker Compose to launch Kpow, Kafka Connect, and Schema Registry.

First, create a setup script to prepare the environment. This script downloads the MSK Data Generator (a useful source connector for creating sample data) and sets up the JAAS configuration files required for Schema Registry's basic authentication.
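The realm file written by the setup script gives the `admin` user the password `admin` (the credentials Kpow and the connector use later), stored as a Jetty `CRYPT:` hash. If you want a different password, Jetty's property-file credentials also accept an `MD5:` prefix followed by the hex MD5 digest of the password. Below is a minimal sketch for generating such an entry, assuming GNU coreutils `md5sum`; `realm_line` is a hypothetical helper, and because MD5 is a weak hash, this approach only suits a demo environment like this one.

```bash
#!/usr/bin/env bash
# realm_line USER PASSWORD ROLE
# Hypothetical helper: prints a Jetty realm entry of the form
#   USER: MD5:<hex digest of PASSWORD>,ROLE
# suitable for schema_realm.properties. Uses GNU coreutils md5sum.
realm_line() {
  local user="$1" pass="$2" role="$3" digest
  # printf '%s' avoids hashing a trailing newline along with the password.
  digest="$(printf '%s' "$pass" | md5sum | cut -d' ' -f1)"
  printf '%s: MD5:%s,%s\n' "$user" "$digest" "$role"
}
```

Run it after `setup.sh`, since the script deletes and recreates `deps/`, for example `realm_line admin 'new-password' schema-admin > deps/schema/schema_realm.properties`. Then use the new password for `SCHEMA_REGISTRY_PASSWORD` in the Compose file and in `value.converter.basic.auth.user.info` in the connector configuration.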

Save the following as setup.sh:

```bash
#!/usr/bin/env bash
set -euo pipefail

SCRIPT_PATH="$(cd "$(dirname "$0")" && pwd)"
DEPS_PATH="$SCRIPT_PATH/deps"

# Start from a clean deps directory on every run
rm -rf "$DEPS_PATH" && mkdir -p "$DEPS_PATH"

echo "Set-up environment..."

echo "Downloading MSK data generator..."
mkdir -p "$DEPS_PATH/connector/msk-datagen"
# --fail makes curl exit non-zero on HTTP errors instead of saving an error page as the jar
curl --fail --silent --show-error -L -o "$DEPS_PATH/connector/msk-datagen/msk-data-generator.jar" \
  https://github.com/awslabs/amazon-msk-data-generator/releases/download/v0.4.0/msk-data-generator-0.4-jar-with-dependencies.jar

echo "Create Schema Registry configs..."
mkdir -p "$DEPS_PATH/schema"

# JAAS login module for Schema Registry's BASIC authentication (realm "schema")
cat << 'EOF' > "$DEPS_PATH/schema/schema_jaas.conf"
schema {
  org.eclipse.jetty.jaas.spi.PropertyFileLoginModule required
  debug="true"
  file="/etc/schema/schema_realm.properties";
};
EOF

# Realm users: "admin" (password stored as a Jetty CRYPT hash) with role schema-admin
cat << 'EOF' > "$DEPS_PATH/schema/schema_realm.properties"
admin: CRYPT:adpexzg3FUZAk,schema-admin
EOF

echo "Environment setup completed."
```

Next, create a `docker-compose.yml` file. This defines our three services. Be sure to replace the placeholder values (<BOOTSTRAP_SERVER_ADDRESS>, <VAULT_USERNAME>, <VAULT_PASSWORD>) with your specific OCI Kafka details.

```yaml
services:
  kpow:
    image: factorhouse/kpow-ce:latest
    container_name: kpow
    pull_policy: always
    restart: always
    ports:
      - "3000:3000"
    networks:
      - factorhouse
    depends_on:
      connect:
        condition: service_healthy
    environment:
      ENVIRONMENT_NAME: "OCI Kafka Cluster"
      BOOTSTRAP: "<BOOTSTRAP_SERVER_ADDRESS>"
      SECURITY_PROTOCOL: "SASL_SSL"
      SASL_MECHANISM: "SCRAM-SHA-512"
      SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.scram.ScramLoginModule required username="<VAULT_USERNAME>" password="<VAULT_PASSWORD>";'
      CONNECT_NAME: "Local Connect Cluster"
      CONNECT_REST_URL: "http://connect:8083"
      SCHEMA_REGISTRY_NAME: "Local Schema Registry"
      SCHEMA_REGISTRY_URL: "http://schema:8081"
      SCHEMA_REGISTRY_AUTH: "USER_INFO"
      SCHEMA_REGISTRY_USER: "admin"
      SCHEMA_REGISTRY_PASSWORD: "admin"
    env_file:
      - license.env

  schema:
    image: confluentinc/cp-schema-registry:7.8.0
    container_name: schema_registry
    ports:
      - "8081:8081"
    networks:
      - factorhouse
    environment:
      SCHEMA_REGISTRY_HOST_NAME: "schema"
      SCHEMA_REGISTRY_KAFKASTORE_BOOTSTRAP_SERVERS: "<BOOTSTRAP_SERVER_ADDRESS>"
      ## Authentication
      SCHEMA_REGISTRY_KAFKASTORE_SECURITY_PROTOCOL: "SASL_SSL"
      SCHEMA_REGISTRY_KAFKASTORE_SASL_MECHANISM: "SCRAM-SHA-512"
      SCHEMA_REGISTRY_KAFKASTORE_SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.scram.ScramLoginModule required username="<VAULT_USERNAME>" password="<VAULT_PASSWORD>";'
      SCHEMA_REGISTRY_AUTHENTICATION_METHOD: BASIC
      SCHEMA_REGISTRY_AUTHENTICATION_REALM: schema
      SCHEMA_REGISTRY_AUTHENTICATION_ROLES: schema-admin
      SCHEMA_REGISTRY_OPTS: -Djava.security.auth.login.config=/etc/schema/schema_jaas.conf
    volumes:
      - ./deps/schema:/etc/schema

  connect:
    image: confluentinc/cp-kafka-connect:7.8.0
    container_name: connect
    restart: unless-stopped
    ports:
      - "8083:8083"
    networks:
      - factorhouse
    environment:
      CONNECT_BOOTSTRAP_SERVERS: "<BOOTSTRAP_SERVER_ADDRESS>"
      CONNECT_REST_PORT: "8083"
      CONNECT_GROUP_ID: "oci-demo-connect"
      CONNECT_CONFIG_STORAGE_TOPIC: "oci-demo-connect-config"
      CONNECT_OFFSET_STORAGE_TOPIC: "oci-demo-connect-offsets"
      CONNECT_STATUS_STORAGE_TOPIC: "oci-demo-connect-status"
      ## Authentication
      CONNECT_SECURITY_PROTOCOL: "SASL_SSL"
      CONNECT_SASL_MECHANISM: "SCRAM-SHA-512"
      CONNECT_SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.scram.ScramLoginModule required username="<VAULT_USERNAME>" password="<VAULT_PASSWORD>";'
      # Apply the same auth settings to the producers and consumers used by connector tasks
      CONNECT_PRODUCER_SECURITY_PROTOCOL: "SASL_SSL"
      CONNECT_PRODUCER_SASL_MECHANISM: "SCRAM-SHA-512"
      CONNECT_PRODUCER_SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.scram.ScramLoginModule required username="<VAULT_USERNAME>" password="<VAULT_PASSWORD>";'
      CONNECT_CONSUMER_SECURITY_PROTOCOL: "SASL_SSL"
      CONNECT_CONSUMER_SASL_MECHANISM: "SCRAM-SHA-512"
      CONNECT_CONSUMER_SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.scram.ScramLoginModule required username="<VAULT_USERNAME>" password="<VAULT_PASSWORD>";'
      CONNECT_KEY_CONVERTER: "org.apache.kafka.connect.json.JsonConverter"
      CONNECT_VALUE_CONVERTER: "org.apache.kafka.connect.json.JsonConverter"
      CONNECT_INTERNAL_KEY_CONVERTER: "org.apache.kafka.connect.json.JsonConverter"
      CONNECT_INTERNAL_VALUE_CONVERTER: "org.apache.kafka.connect.json.JsonConverter"
      CONNECT_REST_ADVERTISED_HOST_NAME: "localhost"
      CONNECT_LOG4J_ROOT_LOGLEVEL: "INFO"
      CONNECT_PLUGIN_PATH: /usr/share/java/,/etc/kafka-connect/jars
    volumes:
      - ./deps/connector:/etc/kafka-connect/jars
    healthcheck:
      test: ["CMD-SHELL", "curl -f http://localhost:8083/ || exit 1"]
      interval: 5s
      timeout: 3s
      retries: 10
      start_period: 20s

networks:
  factorhouse:
    name: factorhouse
```

Finally, create a license.env file with your Kpow license details. Then, run the setup script and launch the services:

chmod +x setup.sh

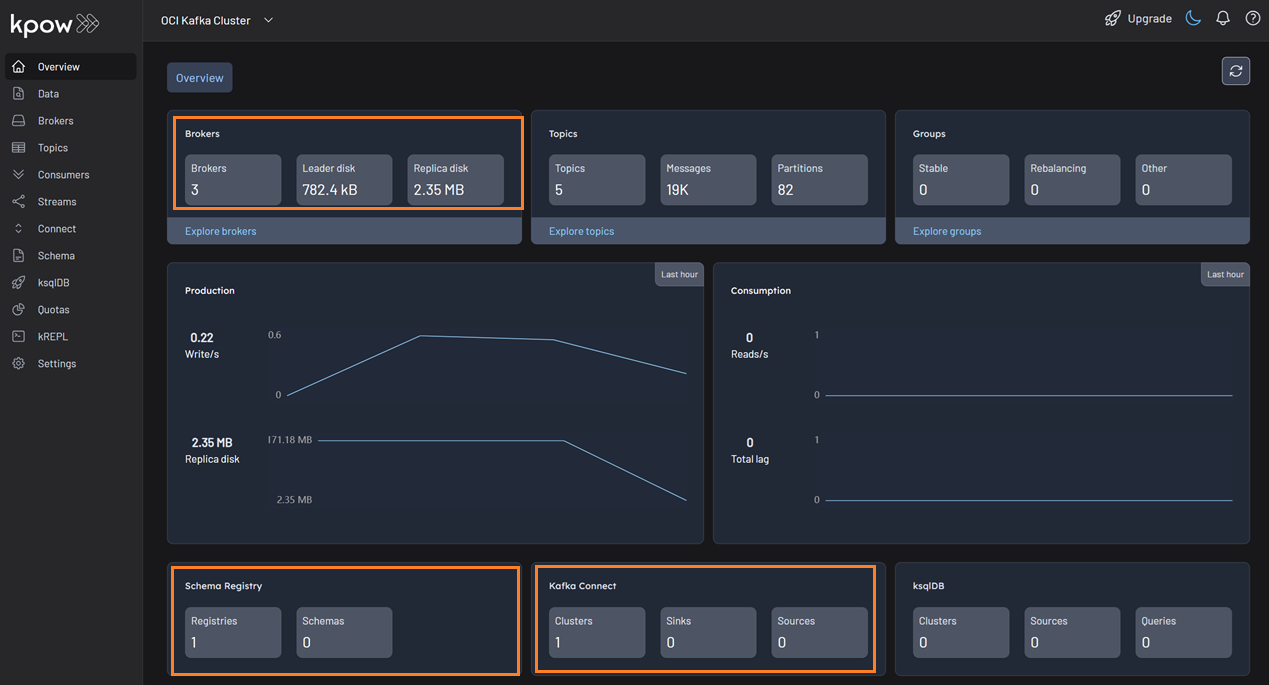

bash setup.sh && docker-compose up -dKpow will now be accessible at http://<vm-ip-address>:3000. You will see an overview of your OCI Kafka cluster, including your self-hosted Kafka Connect and Schema Registry instances.

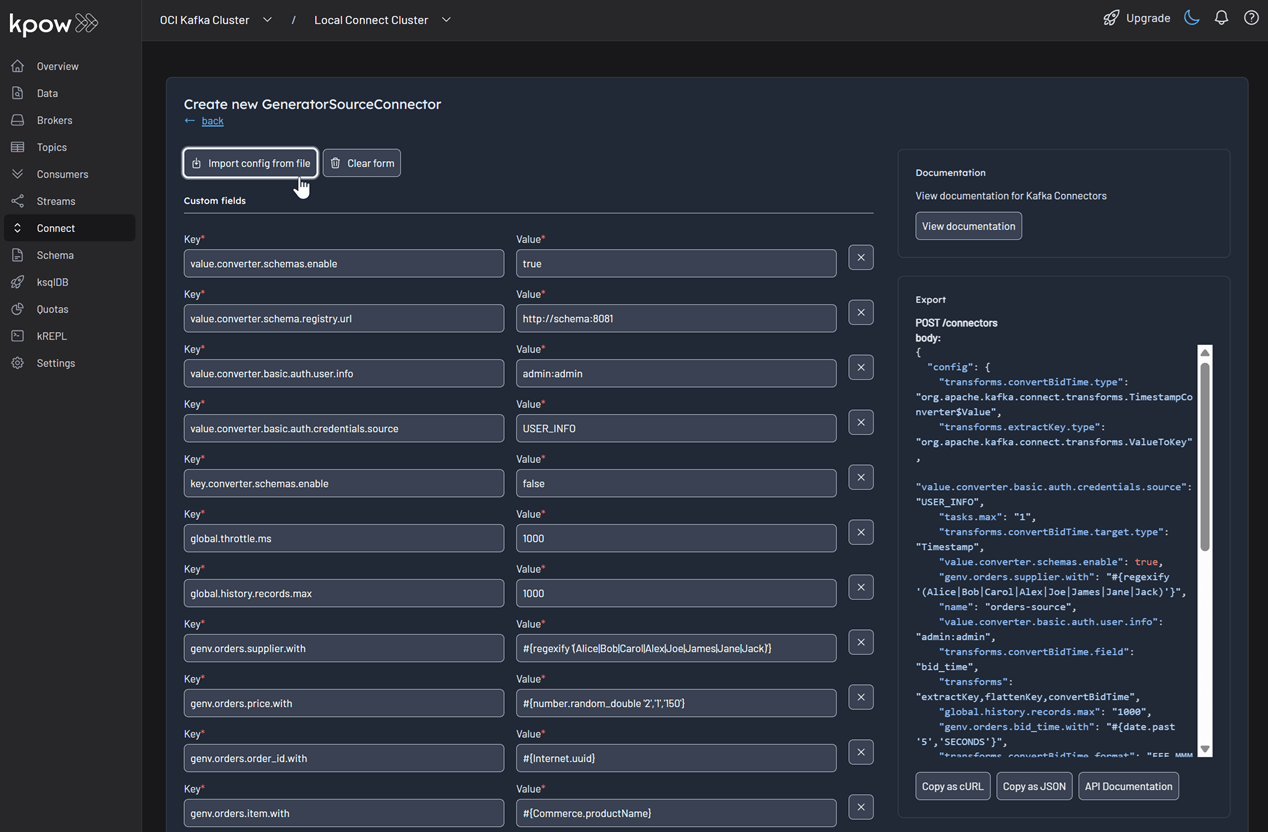
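Containers can take a little while to become ready, particularly Kafka Connect. To check the services from the VM itself, a small polling loop against the published ports is enough. This is a sketch: `wait_for_http` is a hypothetical helper, it assumes `curl` is installed on the VM, and the URLs in the usage comments assume the port mappings and admin/admin Schema Registry credentials from the Compose file.

```bash
#!/usr/bin/env bash
# wait_for_http URL [USER:PASS] [ATTEMPTS]
# Hypothetical helper: polls URL with curl until it returns a 2xx status,
# waiting 2 seconds between attempts. USER:PASS, if given, is sent as
# HTTP basic auth. Returns non-zero if the URL never becomes ready.
wait_for_http() {
  local url="$1" creds="${2:-}" attempts="${3:-30}" i
  local auth=()
  if [ -n "$creds" ]; then
    auth=(-u "$creds")
  fi
  for ((i = 1; i <= attempts; i++)); do
    # -f: treat HTTP errors as failures; -sS: quiet except for errors
    if curl -fsS -o /dev/null "${auth[@]}" "$url" 2>/dev/null; then
      echo "ready: $url"
      return 0
    fi
    if ((i < attempts)); then
      sleep 2
    fi
  done
  echo "not ready after $attempts attempts: $url" >&2
  return 1
}

# Example usage on the client VM:
#   wait_for_http http://localhost:8083/                          # Kafka Connect REST API
#   wait_for_http http://localhost:8081/subjects admin:admin      # Schema Registry (basic auth)
#   wait_for_http http://localhost:3000/                          # Kpow UI
```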

Deploy Kafka Connector

Now let's deploy a connector to generate some data.

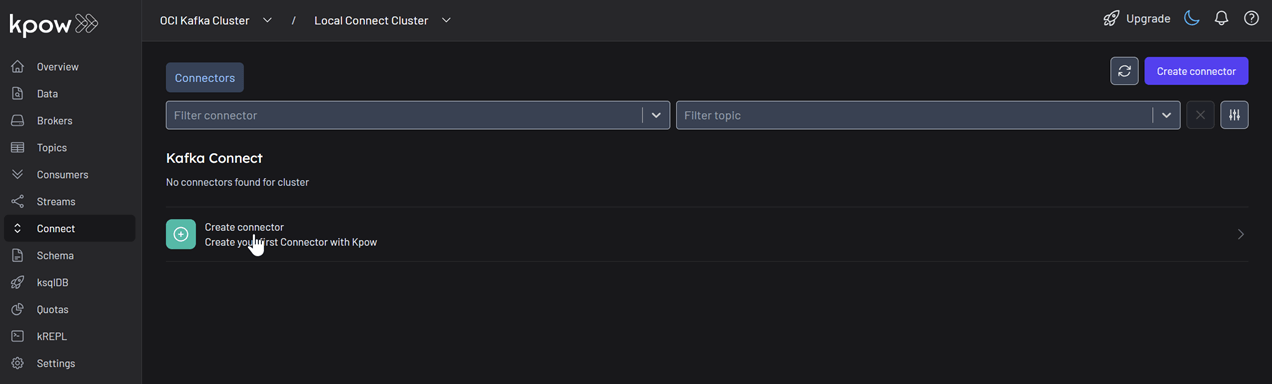

In the Connect menu of the Kpow UI, click the Create connector button.

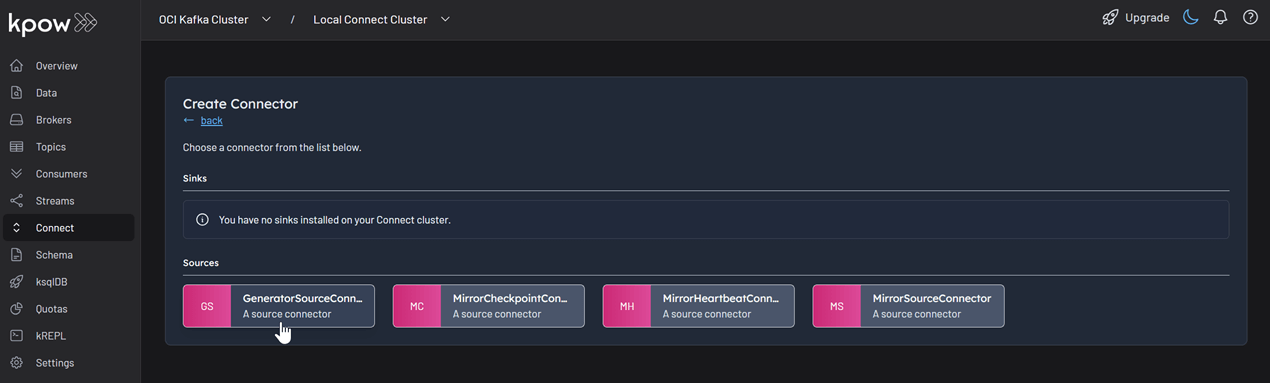

Among the available connectors, select GeneratorSourceConnector, the source connector provided by the MSK Data Generator. With the configuration below, it generates fake order records.

Save the following configuration to a JSON file, then import it and click Create. This configuration tells the connector to generate order data, use Avro for the value, and apply several Single Message Transforms (SMTs) to shape the final message: `order_id` is promoted to the record key, and `bid_time` is converted to a timestamp.

```json
{
  "name": "orders-source",
  "config": {
    "connector.class": "com.amazonaws.mskdatagen.GeneratorSourceConnector",
    "tasks.max": "1",
    "key.converter": "org.apache.kafka.connect.storage.StringConverter",
    "key.converter.schemas.enable": false,
    "value.converter": "io.confluent.connect.avro.AvroConverter",
    "value.converter.schemas.enable": true,
    "value.converter.schema.registry.url": "http://schema:8081",
    "value.converter.basic.auth.credentials.source": "USER_INFO",
    "value.converter.basic.auth.user.info": "admin:admin",
    "genv.orders.order_id.with": "#{Internet.uuid}",
    "genv.orders.bid_time.with": "#{date.past '5','SECONDS'}",
    "genv.orders.price.with": "#{number.random_double '2','1','150'}",
    "genv.orders.item.with": "#{Commerce.productName}",
    "genv.orders.supplier.with": "#{regexify '(Alice|Bob|Carol|Alex|Joe|James|Jane|Jack)'}",
    "transforms": "extractKey,flattenKey,convertBidTime",
    "transforms.extractKey.type": "org.apache.kafka.connect.transforms.ValueToKey",
    "transforms.extractKey.fields": "order_id",
    "transforms.flattenKey.type": "org.apache.kafka.connect.transforms.ExtractField$Key",
    "transforms.flattenKey.field": "order_id",
    "transforms.convertBidTime.type": "org.apache.kafka.connect.transforms.TimestampConverter$Value",
    "transforms.convertBidTime.field": "bid_time",
    "transforms.convertBidTime.target.type": "Timestamp",
    "transforms.convertBidTime.format": "EEE MMM dd HH:mm:ss zzz yyyy",
    "global.throttle.ms": "1000",
    "global.history.records.max": "1000"
  }
}
```
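As an alternative to importing the file through Kpow, the same JSON can be submitted directly to the Kafka Connect REST API, which accepts a `{"name": ..., "config": {...}}` document on `POST /connectors`. The sketch assumes you run it on the client VM, where the Compose file publishes Connect on port 8083, and that the configuration was saved as `orders-source.json`; both that file name and the `create_connector` helper are hypothetical.

```bash
#!/usr/bin/env bash
# create_connector JSON_FILE [CONNECT_URL]
# Hypothetical helper: POSTs a connector definition to the Kafka Connect
# REST API. Defaults to the Connect port published on the client VM.
create_connector() {
  local file="$1" url="${2:-http://localhost:8083}"
  if [ ! -f "$file" ]; then
    echo "no such file: $file" >&2
    return 1
  fi
  # ${url%/} strips a trailing slash so the path is not doubled.
  curl -fsS -X POST -H "Content-Type: application/json" \
    --data @"$file" "${url%/}/connectors"
}
```

Run `create_connector orders-source.json`. Connect responds with HTTP 409 if a connector with that name already exists, which `curl --fail` reports as an error. Afterwards, `curl -s http://localhost:8083/connectors/orders-source/status` shows the state of the connector and its task.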

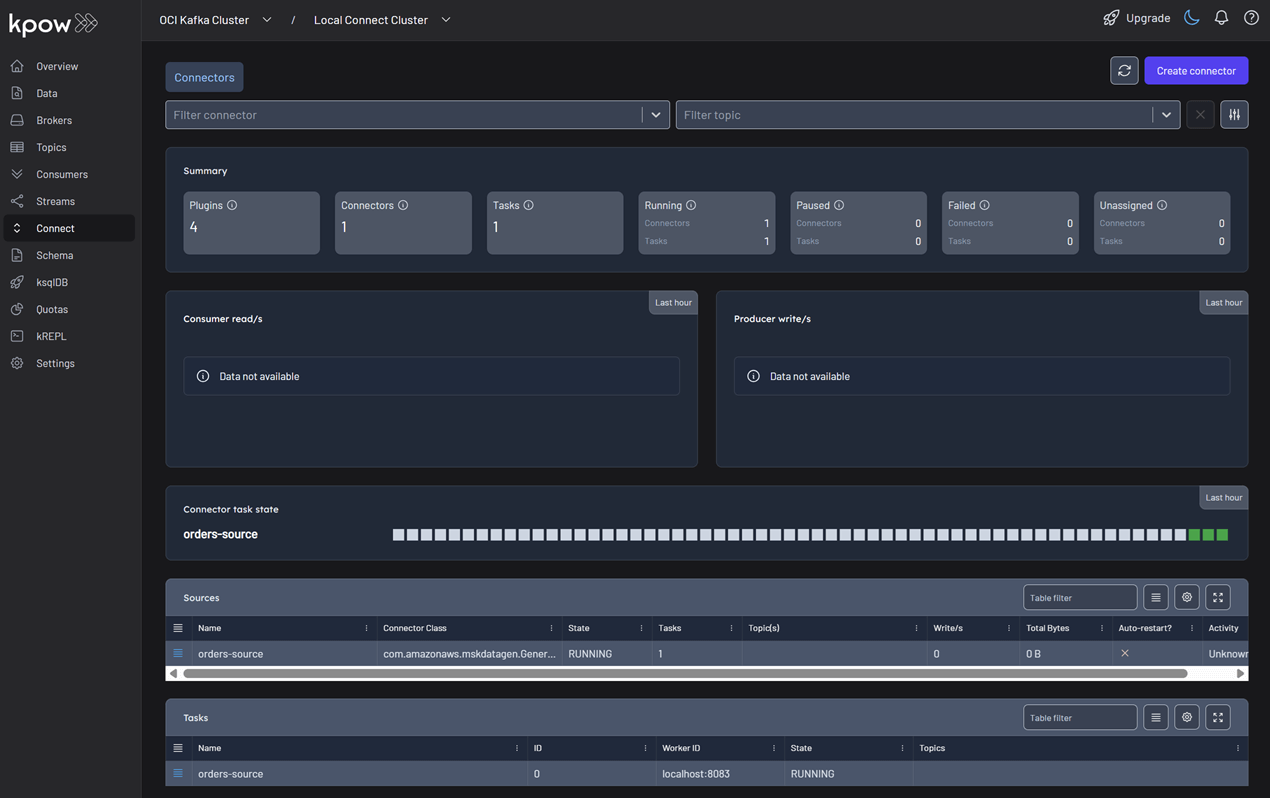

Once deployed, you can see the running connector and its task in the Kpow UI.

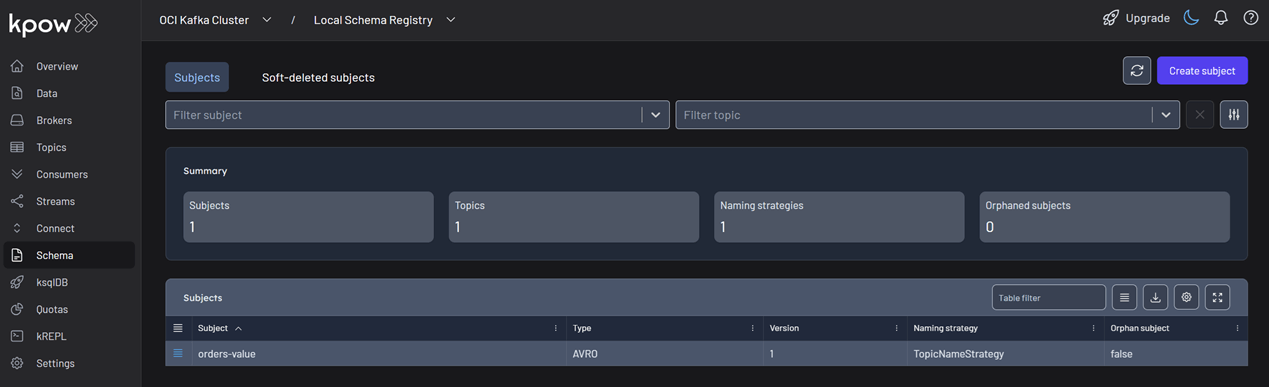

In the Schema menu, you can verify that a new value schema (orders-value) has been registered for the orders topic.
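You can confirm the same thing from the command line: Schema Registry's `GET /subjects` endpoint returns a JSON array of registered subject names. The sketch below assumes the admin/admin basic-auth credentials and the port mapping from the Compose file. `has_subject` is a hypothetical helper that uses a fixed-string `grep` on the quoted name rather than a JSON parser, which is adequate for an exact match like this.

```bash
#!/usr/bin/env bash
# has_subject SUBJECT
# Hypothetical helper: reads a JSON array of subject names on stdin
# (the response of GET /subjects) and succeeds if SUBJECT is listed.
# Matching on the quoted name avoids false hits on e.g. "orders-value-v2".
has_subject() {
  grep -qF "\"$1\""
}

# Example usage on the client VM:
#   curl -fsS -u admin:admin http://localhost:8081/subjects | has_subject orders-value && echo "orders-value registered"
#   curl -fsS -u admin:admin http://localhost:8081/subjects/orders-value/versions/latest
```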

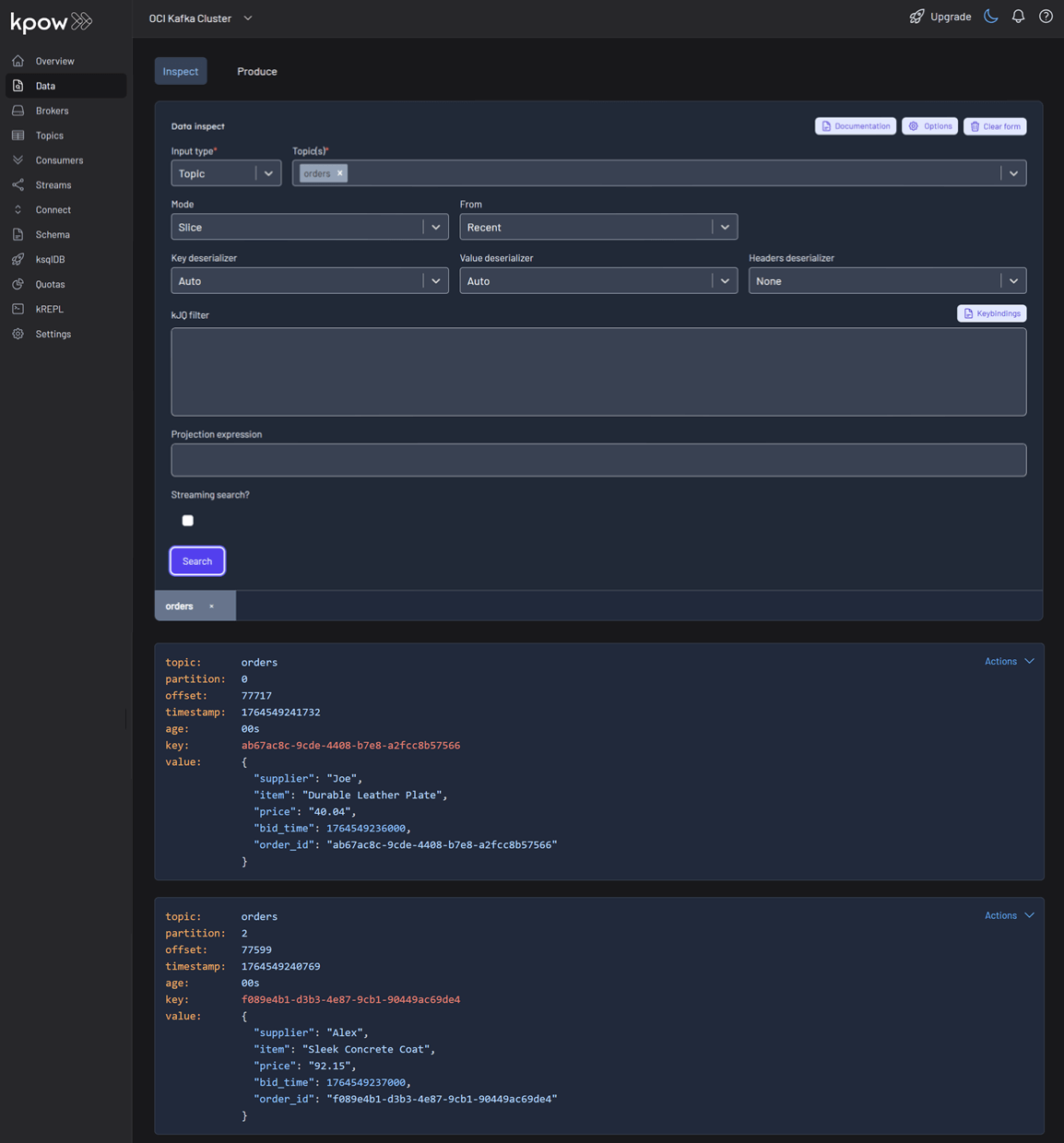

Finally, navigate to Data > Inspect, select the orders topic, and click Search to see the streaming data produced by your new connector.

Conclusion

You have now successfully integrated Kpow with OCI Streaming with Apache Kafka, giving you a complete streaming stack on Oracle Cloud Infrastructure: a managed Kafka cluster alongside self-hosted Kafka Connect and Schema Registry. By deploying these supporting services next to your cluster, you have a fully featured, developer-ready environment.

With Kpow, you have gained end-to-end visibility and control, from monitoring broker health and consumer lag to managing schemas, connectors, and inspecting live data streams. This empowers your team to develop, debug, and operate your Kafka-based applications with confidence.