Release 94.3: BYO AI, Topic data inference, and Data inspect improvements

Release Info

Kpow can be found on Docker Hub

docker pull factorhouse/kpow:94.3

View our Docker quick start guide for help getting started.

Kpow can be found on ArtifactHub

Helm version: 1.0.66

helm repo add factorhouse https://charts.factorhouse.io
helm repo update
helm install --namespace factorhouse --create-namespace my-kpow factorhouse/kpow --version 1.0.66 \
  --set env.LICENSE_ID="00000000-0000-0000-0000-000000000001" \
  --set env.LICENSE_CODE="KPOW_CREDIT" \
  --set env.LICENSEE="Your Corp\, Inc." \
  --set env.LICENSE_EXPIRY="2024-01-01" \
  --set env.LICENSE_SIGNATURE="638......A51" \
  --set env.BOOTSTRAP="127.0.0.1:9092\,127.0.0.1:9093\,127.0.0.1:9094" \
  --set env.SECURITY_PROTOCOL="SASL_PLAINTEXT" \
  --set env.SASL_MECHANISM="PLAIN" \
  --set env.SASL_JAAS_CONFIG="org.apache.kafka.common.security.plain.PlainLoginModule required username=\"user\" password=\"secret\";" \
  --set env.LICENSE_CREDITS="7"

View our Helm instructions for help getting started.

Kpow can be found on the AWS Marketplace

View our AWS Marketplace documentation for help getting started.

Kpow can be downloaded and installed as a Java JAR file. JAR builds are available for Java 17+, Java 11+, and Java 8.

View our JAR quick start guide for help getting started.

For more information, read the Kpow accessibility documentation.

This minor release from Factor House introduces BYO AI model support, topic data inference, and major enhancements to data inspect—such as AI-powered filtering, new UI elements, and AVRO date formatting. It also adds integration with GCP Managed Kafka Schema Registry, improved webhook support, updated policy handling, and several UI and error-handling improvements.

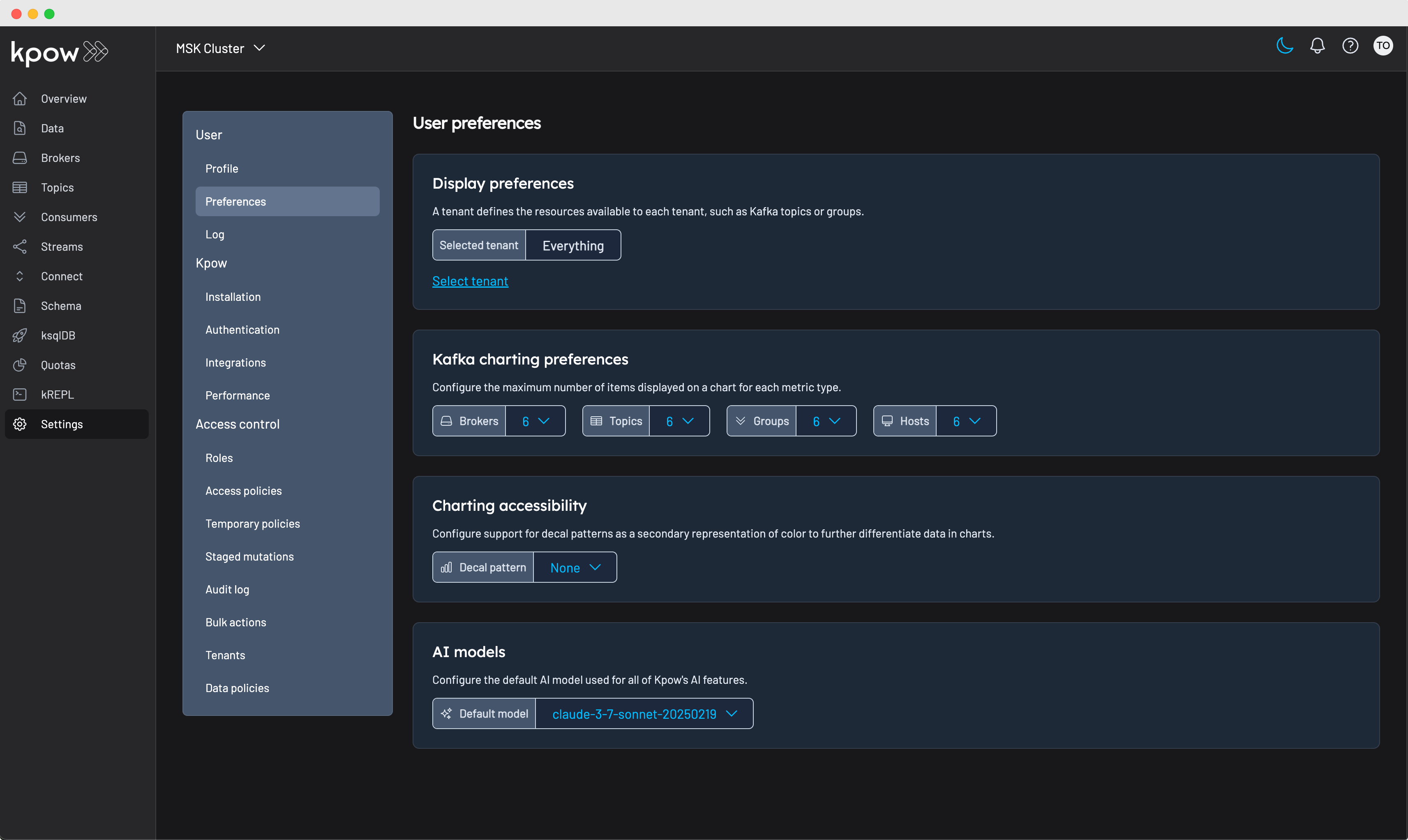

BYO AI

Kpow now offers optional integrations with popular AI models, from Ollama to enterprise solutions like Azure OpenAI. These features are entirely opt-in: unless you configure an AI provider, Kpow will not expose any AI functionality.

As of release 94.3, Kpow supports integrations with:

- Azure OpenAI

- AWS Bedrock

- OpenAI

- Anthropic

- Ollama

You can configure one or more AI models, and set a default model in your user preferences for use with Kpow’s AI-powered features.

These AI models power all AI-powered features in Kpow. Read the documentation for more details.

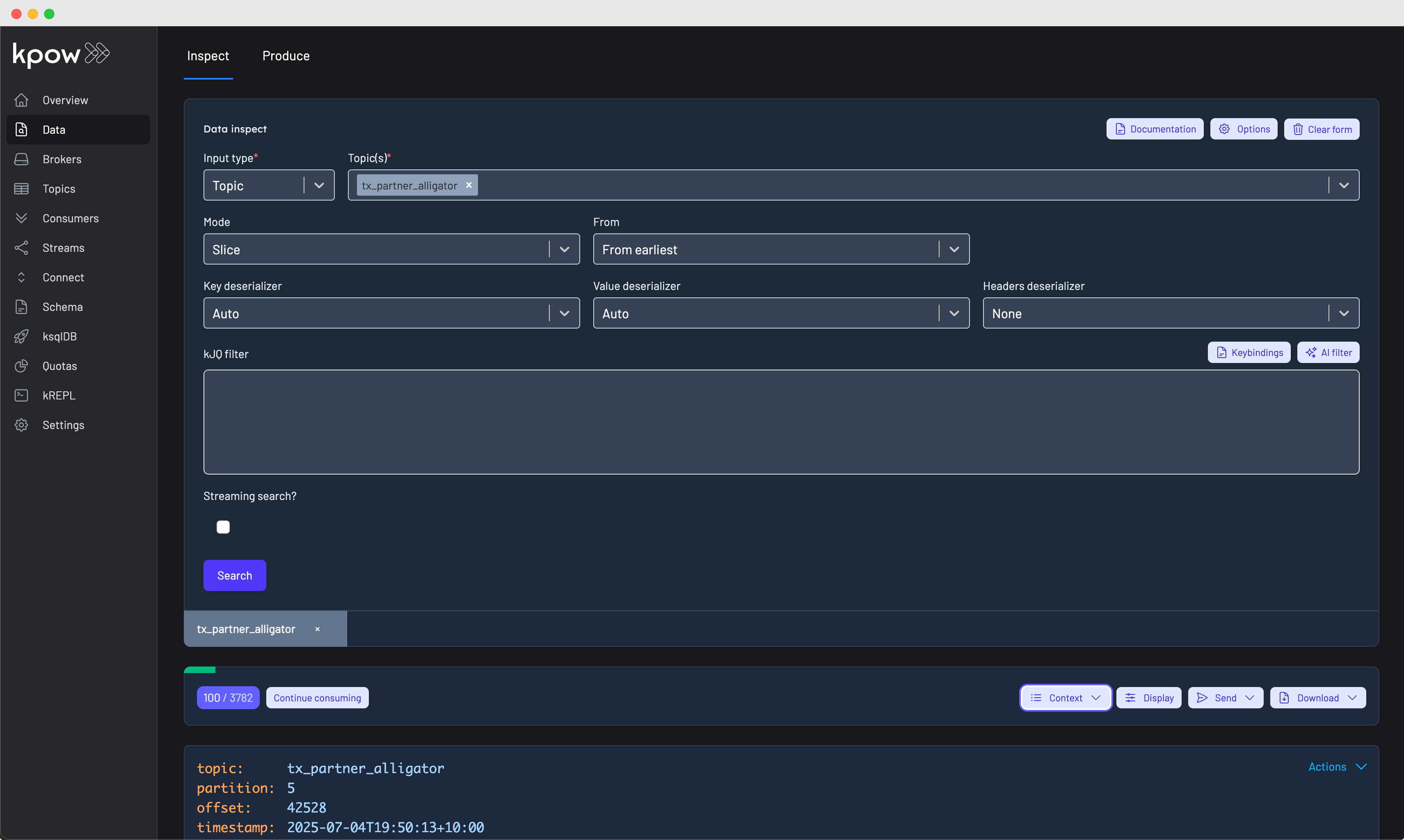

kJQ filter generation

Transform natural language queries into powerful kJQ filters with AI-assisted query generation. This feature empowers users of all technical backgrounds to extract insights from Kafka topics without requiring deep JQ programming knowledge.

How it works

Simply describe what you're looking for in plain English, and the AI model generates a syntactically correct kJQ filter tailored to your data. The system leverages:

- Natural language processing: Convert conversational prompts like "show me all orders over $100 from the last hour" into precise kJQ expressions.

- Schema-aware generation: When topic schemas are available, the AI optionally incorporates field names, data types, and structure to create more accurate filters.

- Validation integration: Generated filters are automatically validated against Kpow's kJQ engine to ensure syntactic correctness before execution.

Usage

Navigate to any topic's Data Inspect view and select the AI Filter option. Enter your query in natural language, and Kpow will generate the corresponding kJQ filter. You can then execute, modify, or save the generated filter for future use.

The AI filter generator works best when provided with specific, actionable descriptions of the data you want to find. Include field names, value ranges, example data and logical operators in your natural language query for optimal results.

Auto deserializers

Within Kpow's Data Inspect UI, you can specify Auto as the key or value deserializer and Kpow will attempt to infer the data format and deserialize the records it consumes.

Auto SerDes provides immediate data inspection capabilities without requiring prior knowledge of topic serialization formats. This feature is particularly valuable when:

- Exploring unfamiliar topics for the first time

- Working with multiple topics that may contain mixed data formats

- Debugging serialization issues across different environments

- Onboarding new team members who need quick topic insights

The Auto SerDes option appears alongside manually configurable deserializers like JSON, Avro, String, and custom SerDes in the Data Inspect interface.

When selected, Kpow analyzes each topic and applies the most appropriate deserializer automatically.
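To give a feel for what format inference involves, here is a minimal per-record sketch in Python. It is a hypothetical heuristic, not Kpow's actual inference logic: the function name and return labels are made up, and it only checks for the Confluent schema registry wire format (a 0x00 magic byte followed by a 4-byte big-endian schema ID), then JSON, then UTF-8 text, falling back to raw bytes.

```python
import json
import struct
from typing import Optional


def guess_format(payload: Optional[bytes]) -> str:
    """Guess a record's serialization format (hypothetical heuristic, not Kpow's)."""
    if not payload:
        return "empty"  # null or zero-length key/value
    # Confluent wire format: magic byte 0x00, then a 4-byte big-endian schema ID.
    if payload[0] == 0 and len(payload) >= 5:
        (schema_id,) = struct.unpack(">I", payload[1:5])
        return f"schema-registry:{schema_id}"
    try:
        text = payload.decode("utf-8")
    except UnicodeDecodeError:
        return "bytes"  # not valid UTF-8, so fall back to raw bytes
    try:
        json.loads(text)
        return "json"
    except json.JSONDecodeError:
        return "string"  # valid UTF-8 text that is not JSON


print(guess_format(b'{"id": 1}'))
print(guess_format(b"\x00\x00\x00\x00\x2a\x02"))
```

The order of checks matters: schema-registry framing is tested first because such payloads are usually not valid UTF-8. Framing alone does not say whether the schema is Avro, Protobuf or JSON Schema, so a real implementation would also need to consult the registry and would likely sample several records rather than trust one.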

Topic inference observation

To persist and query inferred topic information—such as key deserializer, value deserializer, and schema registry ID—in Kpow’s UI, enable the Topic SerDes Observation job by setting:

INFER_TOPIC_SERDES=true

kJQ language improvements

In response to our customers' evolving filtering needs, we've significantly improved the kJQ language to make Kafka record filtering more powerful and flexible. Check out the updated kJQ filters documentation for full details.

Below are some highlights of the improvements:

Chained alternatives

Selects the first non-null email address and checks if it ends with ".com":

.value.primary_email // .value.secondary_email // .value.contact_email | endswith(".com")

String/Array slices

Matches where the first 3 characters of transaction_id equal TXN:

.value.transaction_id[0:3] == "TXN"

For example, { "transaction_id": "TXN12345" } matches, while { "transaction_id": "ORD12345" } does not.
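The semantics of the two kJQ examples above can be modelled in plain Python. This is a sketch of the jq-style behaviour, not kJQ itself: the helper names are hypothetical, and treating a record with no usable email as a non-match is an assumption (jq would raise an error when `endswith` receives null).

```python
from typing import Any


def alternative(*values: Any) -> Any:
    # Model jq-style `a // b // c` for single values: return the first
    # value that is neither null (None) nor false.
    for v in values:
        if v is not None and v is not False:
            return v
    # `//` yields its right-hand side when everything before it is null/false.
    return values[-1] if values else None


def has_com_email(record: dict) -> bool:
    # Models: .value.primary_email // .value.secondary_email // .value.contact_email | endswith(".com")
    value = record.get("value") or {}
    email = alternative(
        value.get("primary_email"),
        value.get("secondary_email"),
        value.get("contact_email"),
    )
    # Assumption: no usable email means the record does not match.
    return isinstance(email, str) and email.endswith(".com")


def is_txn(record: dict) -> bool:
    # Models: .value.transaction_id[0:3] == "TXN"
    tid = (record.get("value") or {}).get("transaction_id")
    return isinstance(tid, str) and tid[0:3] == "TXN"


print(has_com_email({"value": {"primary_email": None, "secondary_email": "ops@example.com"}}))
print(is_txn({"value": {"transaction_id": "ORD12345"}}))
```

Note that `alternative` deliberately avoids Python's `or`: in jq only null and false count as missing, so values such as `0` or `""` are kept, whereas `or` would skip them.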

UUID type support

kJQ supports UUID types out of the box, whether they come from the UUID deserializer, Avro logical types, or deserializers with richer data types such as Transit / JSON and EDN.
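Typed values are why a plain string literal is not enough. The release notes do not spell out what kJQ does without the prefix, so the following is only a Python analogy: a value deserialized as a UUID does not compare equal to its string form, while coercing the literal to a UUID first (which is what the prefix does in kJQ) makes the comparison succeed.

```python
import uuid

# A key deserialized as a UUID is a typed value, not a string.
key = uuid.UUID("fc1ba6a8-6d77-46a0-b9cf-277b6d355fa6")

# Comparing the typed value with a plain string literal is False ...
print(key == "fc1ba6a8-6d77-46a0-b9cf-277b6d355fa6")  # False

# ... but coercing the literal to a UUID first makes the comparison work,
# and also normalizes differences such as letter case.
print(key == uuid.UUID("FC1BA6A8-6D77-46A0-B9CF-277B6D355FA6"))  # True
```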

To compare against literal UUID strings, prefix them with #uuid to coerce into a UUID:

.key == #uuid "fc1ba6a8-6d77-46a0-b9cf-277b6d355fa6"

Data inspect improvements

UI overhaul

We've enhanced the data inspect UI with smaller toolbar buttons and reorganized dropdowns to accommodate the new features detailed below.

Event log

All queries display the 'Event log' when anomalies occur during search, such as data policy applications, deserialization errors, or record processing exceptions. Each event includes a timestamp and a severity level (INFO, WARN, or ERROR).

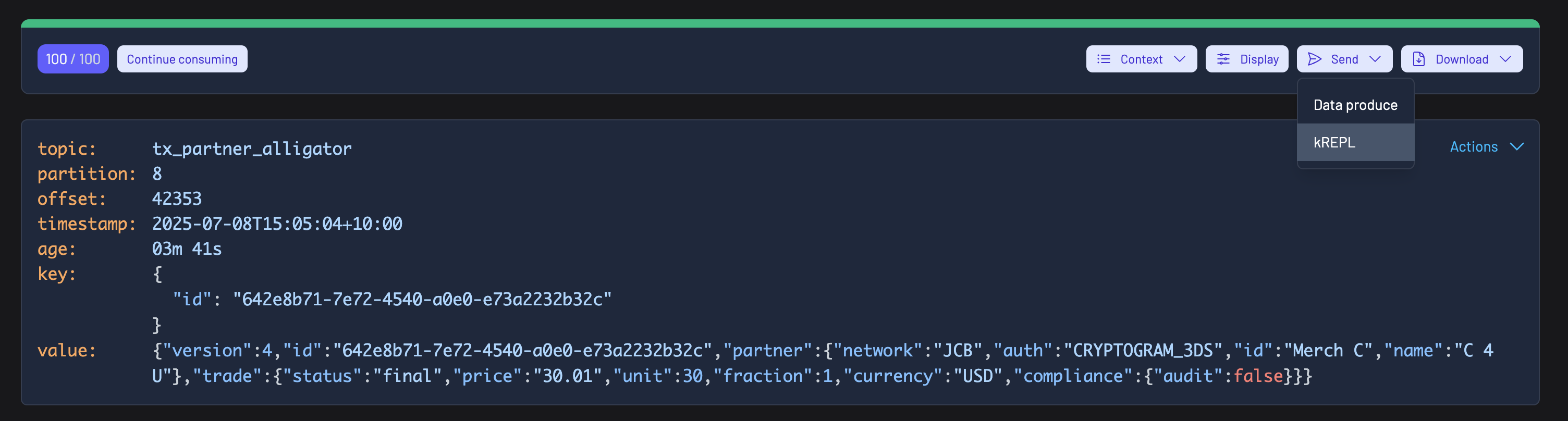
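Each Event log entry, as described above, carries a timestamp and one of three ordered severity levels. A minimal Python sketch of that shape and of filtering by minimum severity follows; the class, field and function names are hypothetical, and the severity assigned to each sample message is made up for illustration rather than taken from Kpow.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import IntEnum
from typing import Iterable, List


class Severity(IntEnum):
    # Ordered so that comparisons like `severity >= Severity.WARN` work.
    INFO = 1
    WARN = 2
    ERROR = 3


@dataclass(frozen=True)
class EventLogEntry:
    timestamp: datetime
    severity: Severity
    message: str


def at_least(entries: Iterable[EventLogEntry], minimum: Severity) -> List[EventLogEntry]:
    # Keep entries at or above `minimum`, ordered oldest first.
    return sorted((e for e in entries if e.severity >= minimum), key=lambda e: e.timestamp)


# Illustrative entries for the kinds of anomalies named above.
log = [
    EventLogEntry(datetime(2025, 1, 1, 12, 0, 2, tzinfo=timezone.utc), Severity.ERROR, "record processing exception"),
    EventLogEntry(datetime(2025, 1, 1, 12, 0, 0, tzinfo=timezone.utc), Severity.INFO, "data policy applied"),
    EventLogEntry(datetime(2025, 1, 1, 12, 0, 1, tzinfo=timezone.utc), Severity.WARN, "deserialization error"),
]

for entry in at_least(log, Severity.WARN):
    print(entry.timestamp.isoformat(), entry.severity.name, entry.message)
```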

Send to kREPL

Customers can now send data inspect queries directly to the kREPL, our programmatic Kafka interface designed for power users. The kREPL enables notebook-style data transformations—such as aggregations and groupings—on consumed data.

You can access this feature through the 'Send' dropdown in the result metadata toolbar. Learn more about the kREPL by visiting our documentation.

GCP managed Kafka schema registry integration

Kpow now supports the GCP Managed Kafka Schema Registry as a variant of the Confluent-compatible schema registry. Here is an example configuration:

ENVIRONMENT_NAME=GCP Kafka Cluster

BOOTSTRAP=bootstrap.<cluster-id>.<gcp-region>.managedkafka.<gcp-project-id>.cloud.goog:9092

SECURITY_PROTOCOL=SASL_SSL

SASL_MECHANISM=OAUTHBEARER

SASL_LOGIN_CALLBACK_HANDLER_CLASS=com.google.cloud.hosted.kafka.auth.GcpLoginCallbackHandler

SASL_JAAS_CONFIG=org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required;

SCHEMA_REGISTRY_NAME=GCP Schema Registry

SCHEMA_REGISTRY_URL=https://managedkafka.googleapis.com/v1/projects/<gcp-project-id>/locations/<gcp-region>/schemaRegistries/<registry-id>

SCHEMA_REGISTRY_BEARER_AUTH_CUSTOM_PROVIDER_CLASS=com.google.cloud.hosted.kafka.auth.GcpBearerAuthCredentialProvider

SCHEMA_REGISTRY_BEARER_AUTH_CREDENTIALS_SOURCE=CUSTOM

Additional webhooks

In addition to Slack, Kpow now supports Microsoft Teams and generic HTTP webhooks. When a webhook is configured, Kpow sends data governance (audit log) records to it.

Temporary policy improvements

Administrators can now set a custom expiration date and time when creating temporary policies. Selecting Custom in the duration dropdown activates a mandatory datetime picker that only allows future dates.

The TEMPORARY_POLICY_MAX_MS environment variable sets the maximum policy duration in milliseconds (default: 1 hour). Setting it to -1 removes the duration limit.
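The rules for a custom expiry can be summarized as a small check: the expiry must be in the future, and it must fall within the configured maximum unless that maximum is -1. The Python helper below is a hypothetical sketch of those rules only; it is not Kpow code, and where Kpow actually enforces them (UI, server, or both) is not stated here.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def check_custom_expiry(expiry: datetime, max_ms: int, now: Optional[datetime] = None) -> Optional[str]:
    # Return an error message if the custom expiry is not allowed, else None.
    now = now or datetime.now(timezone.utc)
    if expiry <= now:
        return "expiry must be in the future"
    # max_ms == -1 disables the duration limit entirely.
    if max_ms != -1 and expiry - now > timedelta(milliseconds=max_ms):
        return f"expiry exceeds the {max_ms} ms limit"
    return None


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
one_hour_ms = 60 * 60 * 1000
print(check_custom_expiry(now + timedelta(minutes=30), one_hour_ms, now))  # None
print(check_custom_expiry(now + timedelta(days=30), -1, now))  # None
```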

Discover more about these updates in our documentation.

Helm charts

Artifact Hub updates

- Added values schema support for improved chart validation

- Charts are now signed for enhanced security and trust

- Publisher identity is verified to ensure authenticity

- Charts are marked as official for trusted, curated content

Container security improvements

The container security context has been tightened by disabling privilege escalation (allowPrivilegeEscalation: false), running the container as a non-root user (UID 1001), disallowing privileged mode, and dropping all Linux capabilities.

Release v94.3 Changelog

See the Factor House Product Roadmap to understand current delivery priorities.

Kpow v94.3 Changelog

See the full Kpow Changelog for information on previous releases

- Add BYO AI models

- Topic serdes inference

- Add 'Auto' deserializer to data inspect

- Add optional infer topic serdes job to populate Kpow's UI with topic serdes information

- Data inspect:

- Add kJQ AI filter generation

- Improve kJQ: chained alternatives, string/array slices, UUID type support

- Add new Event log UI element to capture any data inspect anomalies

- Report any process assignment exceptions to the frontend (previously only logged on the server)

- UI overhaul: new secondary button style for toolbar actions

- Add send to kREPL feature

- User interface:

- Make Dark mode the default theme

- Update temporary policies

- Support temporary policies of any duration (configurable, default max still 7 days)

- Better error messages in the UI

- Kafka Provider Integration

- Add support for GCP Managed Kafka Schema Registry integration

- Error handling:

- Improved error logging for scheduled mutations

- Improved error messaging for stopped connectors

- Code editor: improve keybinding support

- Webhooks: add support for Microsoft Teams and generic HTTP

- Helm Charts:

- Official, signed charts with verified publisher status and values schema validation!

- Improve container security: explicitly set allowPrivilegeEscalation: false and enforce additional hardening through strict securityContext settings.

Flex v94.3 Changelog

See the full Flex Changelog for information on previous releases

- Make Dark mode the default theme

- Fix job heat-map tooltip saying "in a minute"

- Webhooks: add support for Microsoft Teams and generic HTTP

- Helm Charts:

- Official, signed charts with verified publisher status and values schema validation!

- Improve container security: explicitly set allowPrivilegeEscalation: false and enforce additional hardening through strict securityContext settings.
