How-to

Empowering engineers with everything they need to build, monitor, and scale real-time data pipelines with confidence.


Manage Kafka Consumer Offsets with Kpow

Kpow version 94.2 enhances consumer group management, providing greater control over and visibility into Kafka consumption. This article provides a step-by-step guide to managing consumer offsets in Kpow.


Set Up Kpow with Confluent Cloud

A step-by-step guide to configuring Kpow with Confluent Cloud resources including Kafka clusters, Schema Registry, Kafka Connect, and ksqlDB.

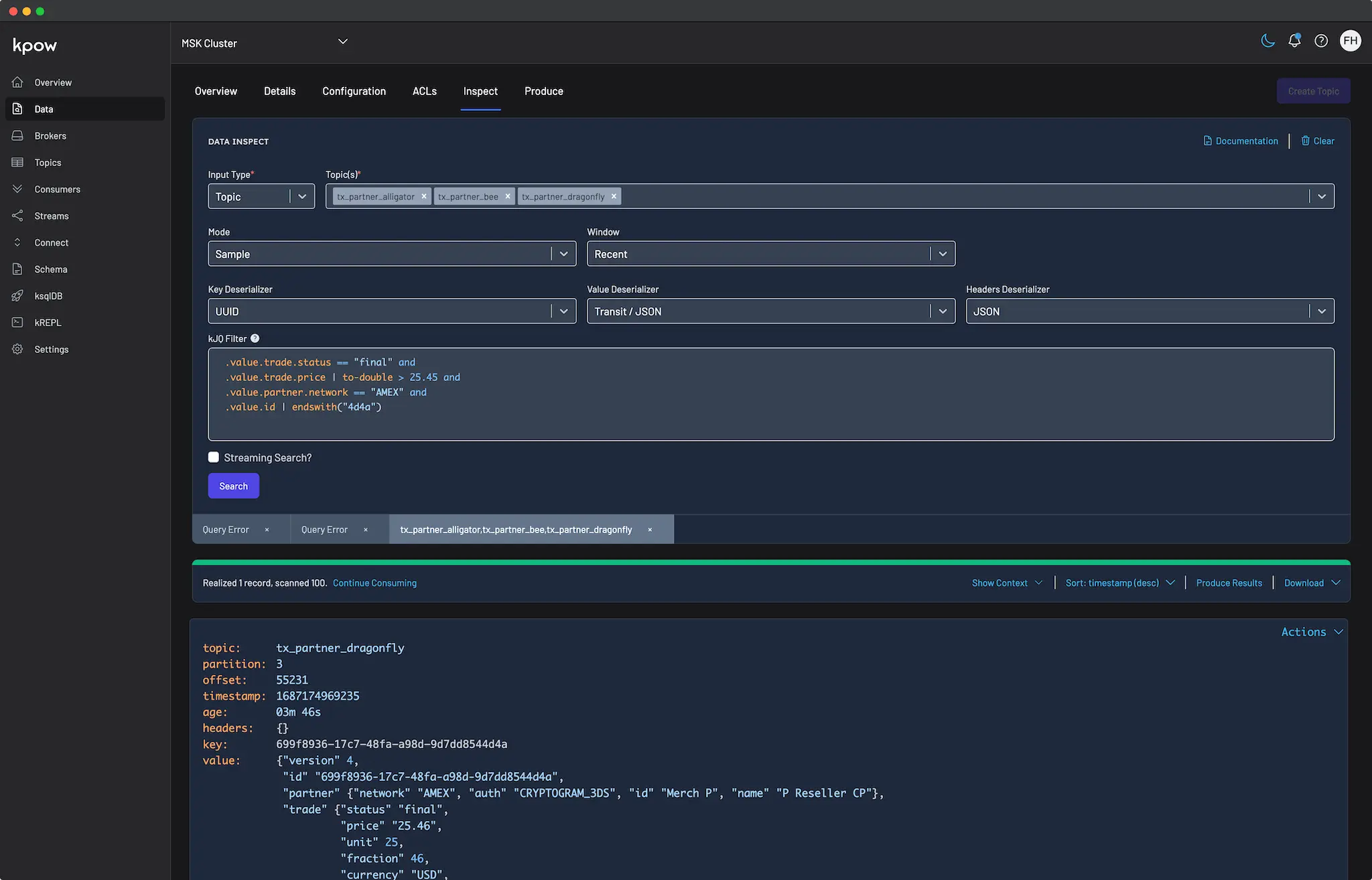

How to query a Kafka topic

Querying Kafka topics is a critical task for engineers working on data streaming applications, but it is often complex and time-consuming. Kpow's data inspect feature is designed to simplify and speed up Kafka topic queries, making it an essential tool for professionals working with Apache Kafka.

Delete Records in Kafka

This article provides a step-by-step guide to the various ways to delete records in Kafka.

Manage Kafka Visibility with Multi-Tenancy

This article teaches you how to configure Kpow to restrict visibility of Kafka resources with Multi-Tenancy.

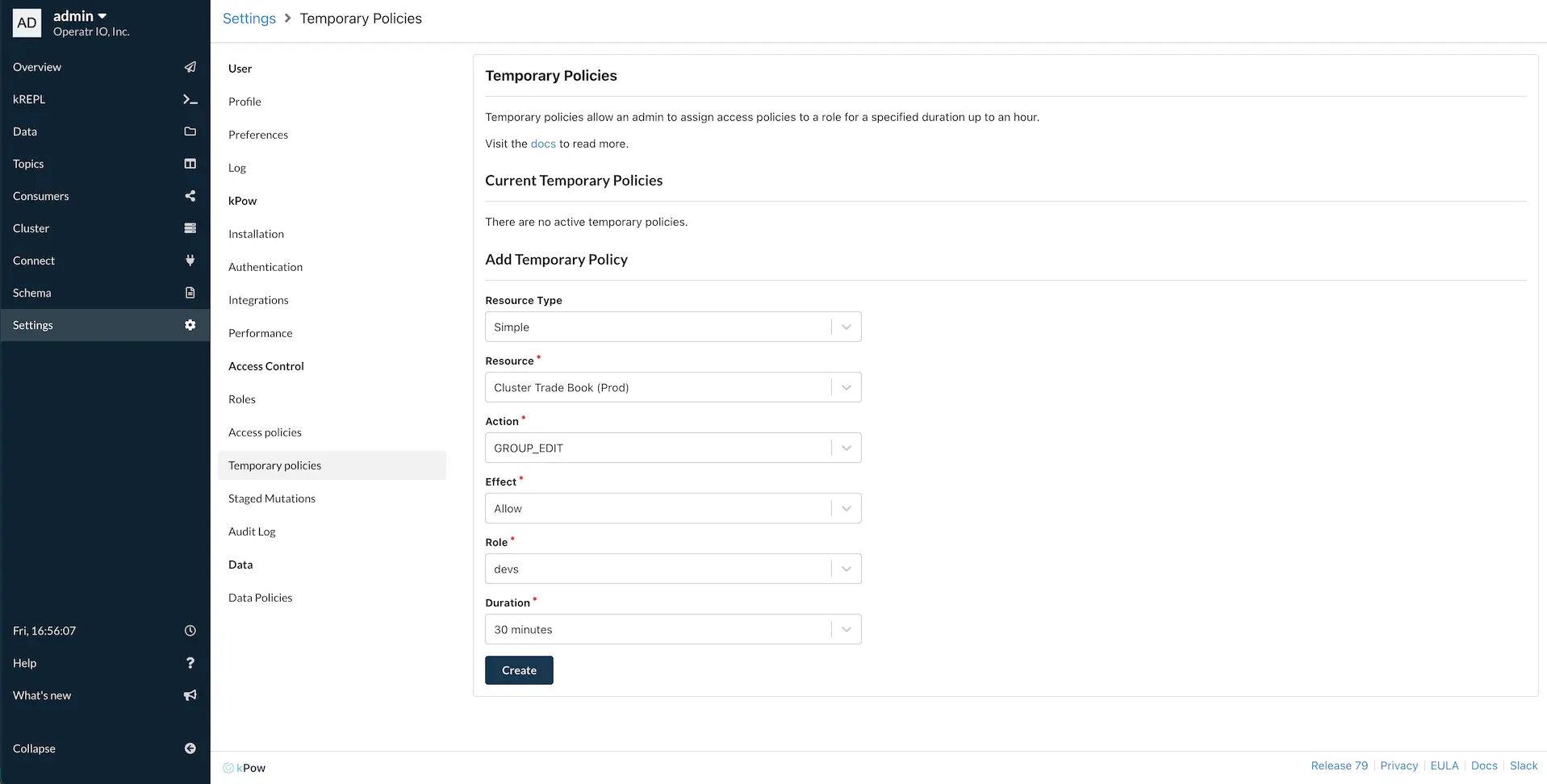

Manage Temporary Access to Kafka Resources

Temporary policies give admins the ability to assign access control policies for a fixed duration. This blog post introduces temporary policies through an all-too-common real-world scenario.

Join the Factor Community

We’re building more than products; we’re building a community. Whether you're getting started or pushing the limits of what's possible with Kafka and Flink, we invite you to connect, share, and learn with others.