Developer Knowledge Center

Empowering engineers with everything they need to build, monitor, and scale real-time data pipelines with confidence.

Release 95.2: quality-of-life improvements across Kpow, Flex & Helm deployments

95.2 focuses on refinement and operability, with improvements across the UI, consumer group workflows, and deployment configuration. Alongside bug fixes and usability improvements, this release adds new Helm options for configuring the API and controlling service account credential automounting.

Highlights

Integrate Kpow with Oracle Cloud Infrastructure (OCI) Streaming with Apache Kafka

Unlock the full potential of your dedicated OCI Streaming with Apache Kafka cluster. This guide shows you how to integrate Kpow with your OCI brokers and self-hosted Kafka Connect and Schema Registry, unifying them into a single, developer-ready toolkit for complete visibility and control over your entire Kafka ecosystem.

Unified community license for Kpow and Flex

The unified Factor House Community License works with both Kpow Community Edition and Flex Community Edition, meaning one license will unlock both products. This makes it even simpler to explore modern data streaming tools, create proof-of-concepts, and evaluate our products.

All Resources

Kpow Custom Serdes and Protobuf v4.31.1

This post explains an update to the version of the protobuf libraries used by Kpow, and the compatibility impact this update may have on user-defined Custom Serdes.

Set Up Kpow with Instaclustr Platform

This guide demonstrates how to set up Kpow with Instaclustr using a practical example. We deploy a Kafka cluster with the Karapace Schema Registry add-on and a Kafka Connect cluster, then use Kpow to deploy custom connectors and manage an end-to-end data pipeline.

Introducing Webhook Support in Kpow

This guide demonstrates how to enhance Kafka monitoring and data governance by integrating Kpow's audit logs with external systems. We provide a step-by-step walkthrough for configuring webhooks to send real-time user activity alerts from your Kafka environment directly into collaboration platforms like Slack and Microsoft Teams, streamlining your operational awareness and response.

Release 94.5: New Factor House docs, enhanced data inspection and URP & KRaft improvements

This release introduces a new unified documentation hub, Factor House Docs. It also brings major data inspection enhancements, including comma-separated kJQ Projection expressions, in-browser search, and over 15 new kJQ transforms and functions. Further improvements include more reliable cluster monitoring through improved Under-Replicated Partition (URP) detection, improved KRaft support, the flexibility to configure custom serializers per cluster, and a fix for a key consumer group offset reset issue.

Enhanced Under-Replicated Partition Detection in Kpow

Kpow now offers enhanced under-replicated partition (URP) detection for more accurate Kafka health monitoring. Our improved calculation correctly identifies URPs even when brokers are offline, providing a true, real-time view of your cluster's fault tolerance. This helps you proactively mitigate risks and ensure data durability.

Introducing Factor House Docs

We're excited to launch the new Factor House Docs, a unified hub for all our product documentation. Discover key improvements like a completely new task-based structure, interactive kJQ examples, and powerful search, all designed to help you find the information you need, faster than ever. Explore the new home for all things Kpow, Flex, Factor Platform and more.

Events & Webinars

Stay plugged in with the Factor House team and our community.

Sydney Workshop: Building Resilient Event-Driven Systems with Kafka and Flink

We're teaming up with NetApp Instaclustr and Ververica to run this intensive half-day workshop where you'll design, build, and operate a complete real-time operational system from the ground up.

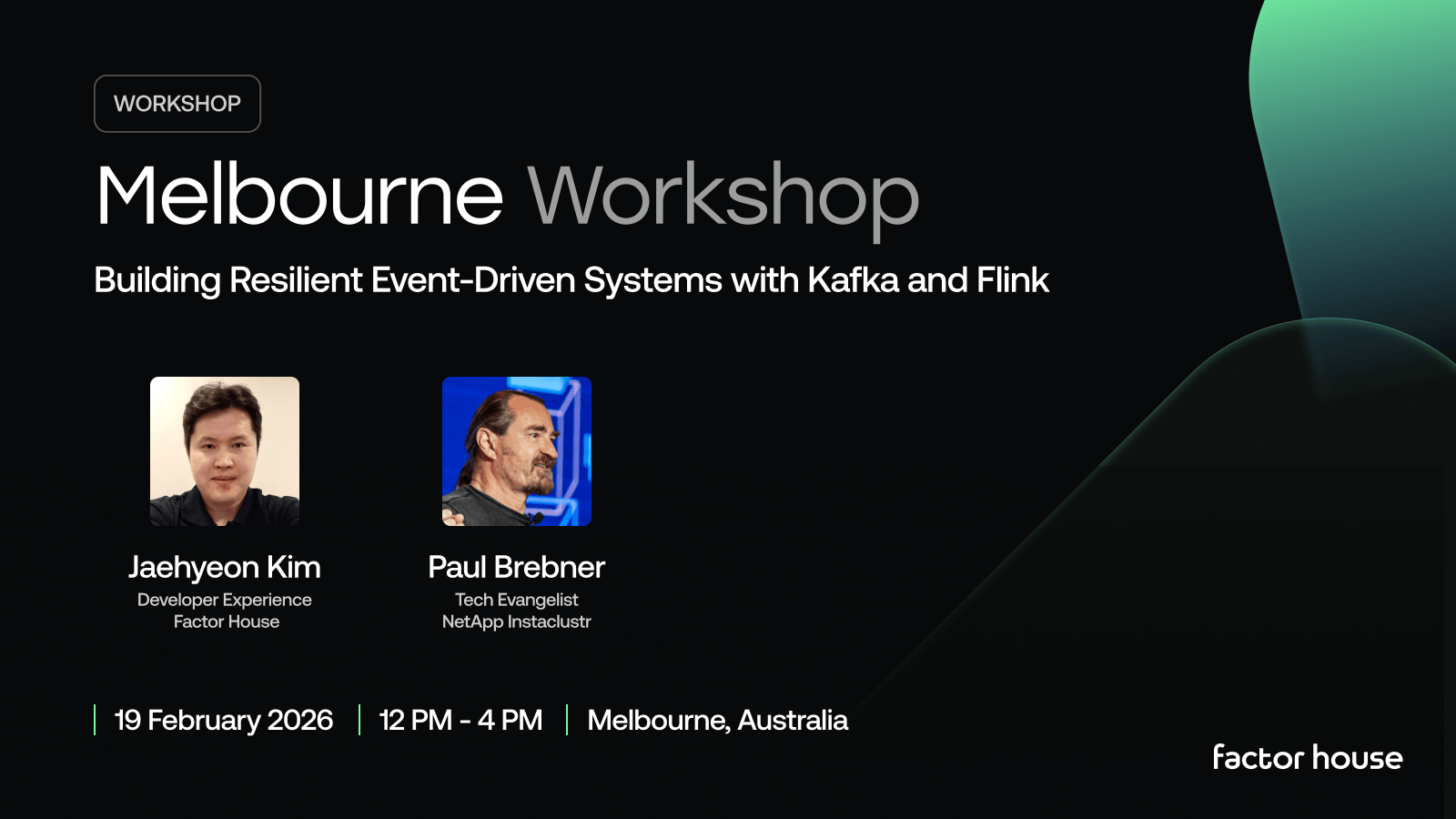

Melbourne Workshop: Building Resilient Event-Driven Systems with Kafka and Flink

We're teaming up with NetApp Instaclustr and Ververica to run this intensive half-day workshop where you'll design, build, and operate a complete real-time operational system from the ground up.

Join the Factor Community

We’re building more than products; we’re building a community. Whether you're getting started or pushing the limits of what's possible with Kafka and Flink, we invite you to connect, share, and learn with others.