Developer Knowledge Center

Empowering engineers with everything they need to build, monitor, and scale real-time data pipelines with confidence.

Release 95.2: quality-of-life improvements across Kpow, Flex & Helm deployments

95.2 focuses on refinement and operability, with improvements across the UI, consumer group workflows, and deployment configuration. Alongside bug fixes and usability improvements, this release adds new Helm options for configuring the API and controlling service account credential automounting.

Highlights

Integrate Kpow with Oracle Cloud Infrastructure (OCI) Streaming with Apache Kafka

Unlock the full potential of your dedicated OCI Streaming with Apache Kafka cluster. This guide shows you how to integrate Kpow with your OCI brokers and self-hosted Kafka Connect and Schema Registry, unifying them into a single, developer-ready toolkit for complete visibility and control over your entire Kafka ecosystem.

Unified community license for Kpow and Flex

The unified Factor House Community License works with both Kpow Community Edition and Flex Community Edition, meaning one license will unlock both products. This makes it even simpler to explore modern data streaming tools, create proof-of-concepts, and evaluate our products.

All Resources

Introduction to Factor House Local

Jumpstart your journey into modern data engineering with Factor House Local. Explore pre-configured Docker environments for Kafka, Flink, Spark, and Iceberg, enhanced with enterprise-grade tools like Kpow and Flex. Our hands-on labs guide you step-by-step, from building your first Kafka client to creating a complete data lakehouse and real-time analytics system. It's the fastest way to learn, prototype, and build sophisticated data platforms.

Integrate Kpow with Google Managed Schema Registry

Kpow 94.3 now integrates with Google Cloud's managed Schema Registry, enabling native OAuth authentication. This guide walks through the complete process of configuring authentication and using Kpow to create, manage, and inspect data validated against Avro schemas.

Improvements to Data Inspect in Kpow 94.3

Kpow's 94.3 release is here, transforming how you work with Kafka. Instantly query topics using plain English with our new AI-powered filtering, automatically decode any message format without manual setup, and leverage powerful new enhancements to our kJQ language. This update makes inspecting Kafka data more intuitive and powerful than ever before.

Release 94.4: Auto SerDes improvements

This minor hotfix release from Factor House resolves a bug when using Auto SerDes without Data policies, and adds support for UTF-8 String Auto SerDes inference.

Release 94.3: BYO AI, Topic data inference, and Data inspect improvements

This minor release from Factor House introduces BYO AI model support, topic data inference, and major enhancements to data inspect, including AI-powered filtering, new UI elements, and Avro date formatting. It also adds integration with GCP Managed Kafka Schema Registry, improved webhook support, updated policy handling, and several UI and error-handling improvements.

Ensuring Your Data Streaming Stack Is Ready for the EU Data Act

The EU Data Act takes effect in September 2025, introducing major implications for teams running Kafka. This article explores what the Act means for data streaming engineers, and how Kpow can help ensure compliance — from user data access to audit logging and secure interoperability.

Events & Webinars

Stay plugged in with the Factor House team and our community.

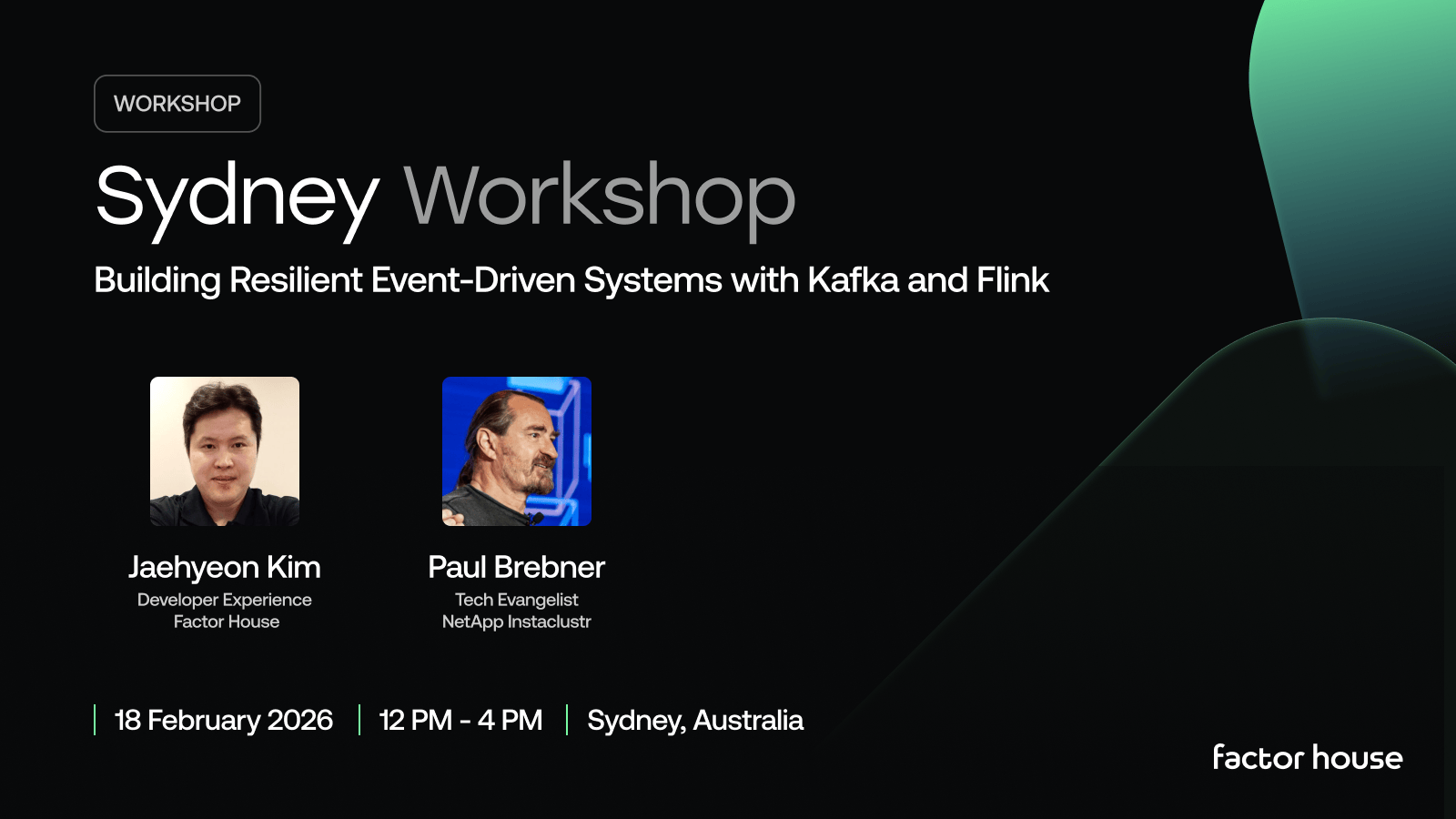

Sydney Workshop: Building Resilient Event-Driven Systems with Kafka and Flink

We're teaming up with NetApp Instaclustr and Ververica to run this intensive half-day workshop where you'll design, build, and operate a complete real-time operational system from the ground up.

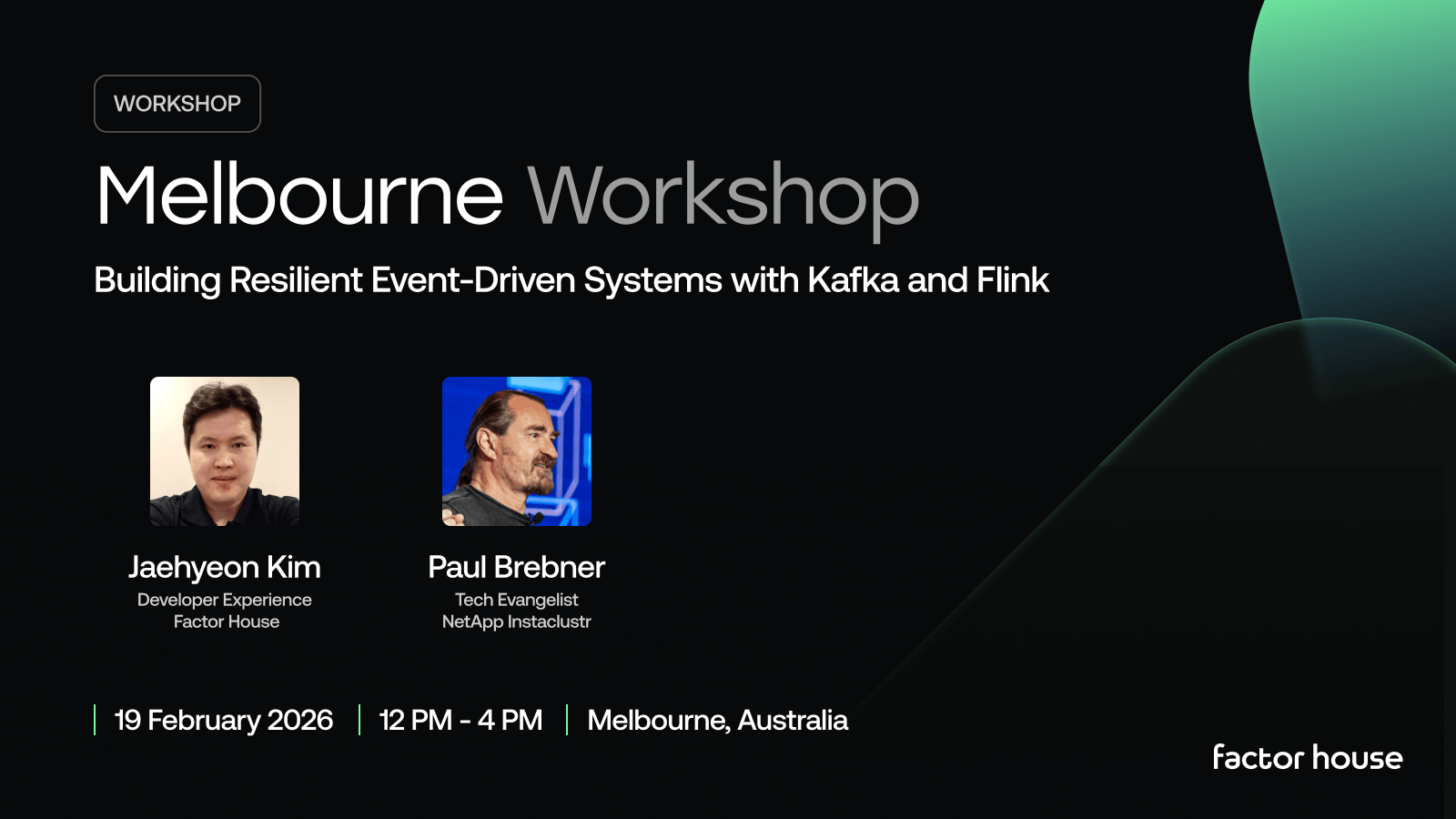

Melbourne Workshop: Building Resilient Event-Driven Systems with Kafka and Flink

We're teaming up with NetApp Instaclustr and Ververica to run this intensive half-day workshop where you'll design, build, and operate a complete real-time operational system from the ground up.

Join the Factor Community

We’re building more than products; we’re building a community. Whether you're getting started or pushing the limits of what's possible with Kafka and Flink, we invite you to connect, share, and learn with others.